Nearly half of all internet traffic now comes from non-human sources, with bad bots making up almost one-third of all online traffic. On social media, the numbers are even more striking – research from January 2025 shows that 65 percent of social media bots are considered malicious. These digital actors shape what we see online without most users even knowing they exist.

Recent events have brought this issue into the spotlight. Meta started 2025 with a major controversy when its plans for AI on its platforms leaked. Users quickly found old bot personas that had been live since 2023 and pointed out errors in their chats, made-up information, and cultural mistakes. Meta has since removed all bot personas, but the damage to trust was already done.

How Bots Hurt Advertisers

For advertisers, this rise in bot traffic creates serious problems. Digital advertisers were projected to spend about $234.14 billion on social media ads in 2024 – a 140% increase from five years earlier. This spending works because advertisers can target specific users with their ads. But when bots fill these platforms, that targeting fails.

Click fraud happens when advertisers pay for clicks from fake users or bots rather than real people. While many advertisers have accepted some click fraud as a cost of doing business, the scale has grown too large. Between 2023 and 2028, costs from digital ad fraud will reach an estimated $172 billion.

Small businesses face the biggest challenge as they often lack tools to measure how much of their ad budget goes to waste on bots. If companies can’t trust that social platforms are keeping bots and fake users in check, they may stop advertising there completely.
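For advertisers who do have click-level data, the waste can be estimated with simple arithmetic. The sketch below is only an illustration: the `Click` record and its `flagged_as_bot` label are hypothetical, and it assumes some detection tool has already marked suspicious clicks.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Click:
    cost: float           # amount billed for this click
    flagged_as_bot: bool  # hypothetical label supplied by a detection tool


def wasted_spend(clicks: List[Click]) -> Tuple[float, float]:
    """Return (money spent on flagged clicks, that amount as a share of total spend)."""
    total = sum(c.cost for c in clicks)
    wasted = sum(c.cost for c in clicks if c.flagged_as_bot)
    share = wasted / total if total else 0.0
    return wasted, share
```

The result is only as reliable as the labels fed into it, so treat the share as a rough estimate rather than an exact figure.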

How To Spot Social Media Bots

Shallow Profiles: Look for generic usernames, low-quality profile pictures or obvious stock photos, and profiles with little personal information.

Poor Writing: Many bots use awkward language with grammar and syntax errors. Bad translations are common.

Odd Timing: Bots often respond instantly to messages or post at unusual hours. They also don’t show a “typing” indicator since they don’t need to type.

Strange Posting Patterns: Watch for accounts that post in rapid bursts or have unusual engagement rates (often low because their followers are also bots).

Copy-Paste Content: Malicious bots tend to share the same or very similar content across different accounts or platforms.

If you’re still not sure, online bot detection tools can help spot non-human users.
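For readers who want to see how such checks might be combined, here is a minimal sketch that turns some of the signs above into simple rules. It is not how any real detection tool works: the `Account` fields and every threshold (four digits in a username, replies under two seconds, 50 posts a day, half of recent posts repeated) are assumptions chosen for illustration, and the writing-quality check is left out because it needs language analysis.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Account:
    """Hypothetical snapshot of a public profile; field names are illustrative."""
    username: str
    bio: str
    has_custom_photo: bool
    posts_per_day: float
    median_reply_seconds: float
    recent_posts: List[str]


def duplicate_ratio(posts: List[str]) -> float:
    """Share of posts that repeat another post once case and spacing are normalized."""
    if not posts:
        return 0.0
    normalized = [" ".join(p.lower().split()) for p in posts]
    return 1.0 - len(set(normalized)) / len(normalized)


def bot_signals(account: Account) -> List[str]:
    """List which warning signs an account shows. Thresholds are assumed, not measured."""
    signals = []
    digits = sum(ch.isdigit() for ch in account.username)
    # Shallow profile: generic-looking username, empty bio, or no custom photo.
    if digits >= 4 or not account.bio.strip() or not account.has_custom_photo:
        signals.append("shallow profile")
    # Odd timing: replies arrive faster than a person could type.
    if account.median_reply_seconds < 2:
        signals.append("instant replies")
    # Strange posting patterns: far more posts per day than a typical user.
    if account.posts_per_day > 50:
        signals.append("high posting volume")
    # Copy-paste content: at least half of recent posts repeat another post.
    if duplicate_ratio(account.recent_posts) >= 0.5:
        signals.append("copy-paste content")
    return signals
```

No single sign proves an account is a bot. Several signs together are a reason to look more closely, not a verdict.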

The Reputation Risk For Brands

The bot issue goes beyond wasted ad dollars. Advertisers worry about their brand appearing next to questionable content. We saw advertisers leave Twitter when it became X – a platform with more bots and less content moderation. Now Meta has cut back on fact-checking while planning to add more AI to the platform, raising fresh concerns for brands.

Bots can directly damage brand reputations, especially for companies with popular social profiles. By linking a brand with scams or unethical practices, bots can ruin consumer trust. For example, they might share fake deals with scam links, making customers lose faith in an otherwise honest brand.

The Privacy Problem

Data privacy adds another layer of concern. It’s not clear how chats between users and bot personas would affect data collection or where this conversation data would end up. This uncertainty could drive users away from platforms that add bot features.

Ultimately, advertisers want to put their money where real users spend time. Social platforms need to keep human users on board and prevent them from moving to competitors they trust more.

Bots and Misinformation

Beyond advertising, bot personas can spread false information widely on social media. We’ve already seen this with bots shaping stories around the 2024 elections and spreading falsehoods about COVID-19.

The threat has grown since Meta cut back on fact-checking across its platforms. This change makes it harder to control bots and the false claims they spread.

The Future of Influencers

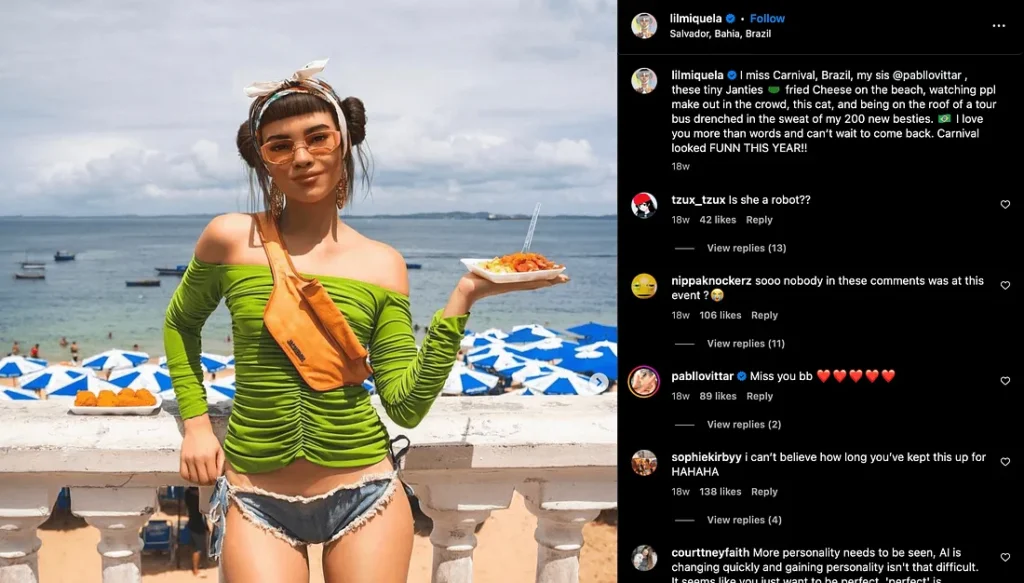

If social platforms embrace bot personas, the $21.1 billion influencer industry will face major changes. AI bot influencers aren’t new – some, like Miquela, are worth millions of dollars. But official bot accounts on Meta’s platforms could take this trend much further.

When bots can like or comment on posts, users won’t know which influencers are truly popular and which ones get artificial boosts from the platform itself.

Protecting Yourself From Bots

Stay Alert: Be careful with unexpected messages or posts that seem too good (or bad) to be true.

Report and Block: If you find a bot, report it to the platform and block the account to avoid future contact.

Use Security Features: Turn on two-factor authentication and limit who can send you direct messages. Check your security settings often.

The Need For Transparency

While social platforms will likely keep testing bot features, the tech industry should push for better rules around social media bots. Platforms need to clearly label bot accounts so users can tell them apart from real people.

Without full transparency about how bots interact with content, advertisers won’t feel safe putting their money into social media. Users deserve to know when they’re talking to a machine rather than a person.

As we move deeper into the “age of the bot,” both users and advertisers face a basic question: What’s real on social media? The answer will shape not just our online experience but the future of digital marketing itself.

Think about who – or what – you’re really talking to next time you get an eager response to your latest post. The answer might surprise you.