AI agents have sparked excitement across the tech world. Unlike basic chatbots, these systems work outside chat windows to handle complex tasks across multiple applications – from scheduling meetings to shopping online. As companies race to develop more capable agents, a critical question emerges: How much control should we give these systems?

Major tech firms release new agent frameworks almost weekly. Anthropic’s Claude now offers “computer use” functionality to act directly on your screen, while systems like Manus can use various online tools without human guidance. These advances mark a significant shift – AI systems designed to work independently in digital spaces.

The appeal is clear. Who wouldn’t want help with tedious tasks? Agents might soon remind you about personal details in conversations or create presentations from scratch. For people with disabilities, they could make digital tasks accessible through simple voice commands. During emergencies, they could coordinate large-scale responses like optimizing evacuation routes.

But the rush toward autonomy brings risks that deserve closer attention. Research from Hugging Face suggests the agent development field may be approaching a dangerous threshold.

The Autonomy Trade-Off

The core issue lies in the very thing that makes AI agents valuable: the more autonomy a system has, the more human control is surrendered. These systems are built for flexibility, handling diverse tasks without specific programming for each one.

Most agents run on large language models, which remain unpredictable and prone to errors. When an LLM makes mistakes in a chat window, the impact stays within that conversation. But when systems can act independently across applications, they might perform unintended actions – manipulating files, impersonating users, or making unauthorized transactions.

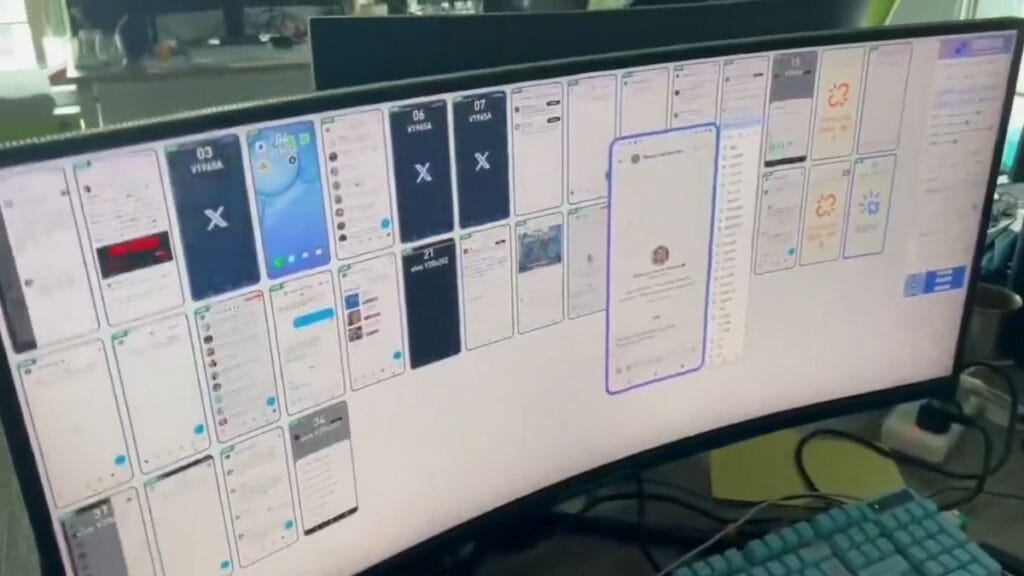

AI agents exist on a spectrum of autonomy. At the lowest level are simple processors like website chatbots. The highest level includes fully autonomous agents that can write and run code without oversight. Between these extremes are systems that route tasks, call tools, or execute multi-step processes – each removing another layer of human control.
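One way to picture this spectrum is as an ordered scale. The sketch below is only an illustration: the names `AutonomyLevel`, `MODEL_DECIDES`, and `levels_above` are hypothetical labels chosen for this example, and the descriptions paraphrase the levels named in the paragraph above rather than any official taxonomy.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical labels for the spectrum described above, lowest to highest."""
    SIMPLE_PROCESSOR = 0   # e.g. a website chatbot
    ROUTER = 1             # routes tasks between predefined paths
    TOOL_CALLER = 2        # calls tools
    MULTI_STEP = 3         # executes multi-step processes
    FULLY_AUTONOMOUS = 4   # writes and runs code without oversight


# Illustrative wording: what the model, rather than a person, decides at each level.
MODEL_DECIDES = {
    AutonomyLevel.SIMPLE_PROCESSOR: "only the text it returns",
    AutonomyLevel.ROUTER: "which predefined path handles the task",
    AutonomyLevel.TOOL_CALLER: "which tool to call",
    AutonomyLevel.MULTI_STEP: "which steps run, and in what order",
    AutonomyLevel.FULLY_AUTONOMOUS: "what new code to write and run",
}


def levels_above(limit: AutonomyLevel) -> list:
    """Return every level that exceeds a chosen autonomy ceiling."""
    return [level for level in AutonomyLevel if level > limit]


# Example: a team that caps its agents at tool calling rules out these levels.
for level in levels_above(AutonomyLevel.TOOL_CALLER):
    print(level.name, "->", MODEL_DECIDES[level])
```

Using an ordered type makes the trade-off explicit: each step up the scale hands one more decision from a person to the model.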

Real-World Risks Beyond Technical Glitches

The practical concerns extend beyond technical failures. An agent that helps you prepare for meetings would need access to your personal information and a running record of your past interactions, which creates privacy risks. Systems that generate directions could help bad actors access secure areas.

When agents can control multiple information sources simultaneously, the danger multiplies. An agent with access to both private communications and public platforms might share personal information online. Even if the information isn’t accurate, it could spread quickly before fact-checking mechanisms catch up.

“It wasn’t me—it was my agent!” might soon become a common excuse for digital mishaps.

History Shows Why Human Oversight Matters

Past incidents highlight the importance of keeping humans in the loop. In 1980, computer systems falsely warned of 2,000 Soviet missiles heading toward North America, nearly causing catastrophe. Human cross-verification between different warning systems prevented disaster. Had decisions been fully automated with speed prioritized over accuracy, the outcome could have been tragic.

While some argue the benefits outweigh the risks, achieving those benefits doesn’t require giving up human control. AI agent development should happen alongside guaranteed human oversight that limits what these systems can do.

The Open-Source Approach to Safety

Open-source agent systems offer one path forward, as they allow greater transparency about system capabilities and limitations. Hugging Face is developing “smolagents,” a framework providing secure environments that let developers build agents with transparency at their core. This allows independent verification of appropriate human control.

This approach contrasts with the trend toward complex, proprietary AI systems that hide decision-making processes behind closed technology, which makes their safety claims hard to verify independently.

Finding the Right Balance

As AI agents become more capable, we must remember that technology should serve human well-being, not just efficiency. This means creating systems that remain tools rather than decision-makers, assistants rather than replacements.

Human judgment, despite its flaws, remains essential to ensure these systems help rather than harm us. The most effective AI agents will be those that enhance human capabilities while keeping people firmly in control of important decisions.

The Path Forward

For AI agents to truly succeed, developers need to:

- Set clear boundaries on agent autonomy based on task sensitivity

- Create mandatory human approval points for consequential actions

- Implement robust monitoring systems that track agent behaviors

- Design transparent processes that explain agent decision-making

- Develop fail-safes that prevent agents from exceeding their authority
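Several of these points can be sketched in a few lines of code. The example below is a minimal illustration under stated assumptions, not a production design: `Sensitivity`, `Action`, and `GuardedExecutor` are hypothetical names invented here, the sensitivity tiers are arbitrary, and the `approve` callback stands in for whatever real approval step (a prompt, a ticket, a second reviewer) a team would use.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Callable, List, Optional


class Sensitivity(IntEnum):
    """Hypothetical tiers; a real system would define its own."""
    LOW = 1      # e.g. reading a calendar
    MEDIUM = 2   # e.g. sending an email
    HIGH = 3     # e.g. moving money


@dataclass
class Action:
    name: str
    sensitivity: Sensitivity
    run: Callable[[], str]


@dataclass
class GuardedExecutor:
    approve: Callable[[Action], bool]                      # human approval point
    max_sensitivity: Sensitivity = Sensitivity.HIGH        # hard ceiling (fail-safe)
    approval_threshold: Sensitivity = Sensitivity.MEDIUM   # boundary by task sensitivity
    audit_log: List[str] = field(default_factory=list)     # monitoring trail

    def execute(self, action: Action) -> Optional[str]:
        # Fail-safe: actions above the ceiling never run, approved or not.
        if action.sensitivity > self.max_sensitivity:
            self.audit_log.append(f"BLOCKED {action.name}")
            return None
        # Consequential actions wait for an explicit human decision.
        if action.sensitivity >= self.approval_threshold and not self.approve(action):
            self.audit_log.append(f"DENIED {action.name}")
            return None
        result = action.run()
        self.audit_log.append(f"RAN {action.name}")
        return result


# Usage: a reviewer who declines everything, with HIGH actions ruled out entirely.
executor = GuardedExecutor(approve=lambda action: False,
                           max_sensitivity=Sensitivity.MEDIUM)
executor.execute(Action("read_calendar", Sensitivity.LOW, lambda: "ok"))
executor.execute(Action("send_email", Sensitivity.MEDIUM, lambda: "sent"))
executor.execute(Action("wire_money", Sensitivity.HIGH, lambda: "paid"))
print(executor.audit_log)
# ['RAN read_calendar', 'DENIED send_email', 'BLOCKED wire_money']
```

The design choice worth noting is that the ceiling is checked before the approval step, so even an approving human cannot push the agent past its configured authority. The audit log is only a starting point for transparency; explaining why an agent chose an action would need more than a list of outcomes.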

By balancing capability with control, we can build AI agents that provide real value while avoiding serious risks. The goal should be collaboration between human intelligence and artificial assistance, rather than replacement.

The most promising future isn’t one where AI agents take over completely, but where they handle routine tasks while humans focus on judgment, creativity, and ethical decisions. This partnership approach keeps the benefits of AI while preserving what humans do best – making nuanced decisions in complex situations.

As we move forward with AI agent development, let’s embrace a vision where technology amplifies human potential rather than diminishes human control. The companies that understand this balance will create the most useful, trustworthy, and successful AI systems.
