Google I/O 2025, Google’s annual developer event, has wrapped up, and the company has made its vision clear: AI is moving from text-based chats to fast, multimodal experiences that blend into daily life. Here’s what this shift means for you, whether you build apps, run a business, or create content.

The New Speed of AI: Why Real-Time Matters

Project Astra, Google’s research prototype for a universal AI assistant, sits at the heart of this shift. Most coverage focused on the technical specs, but the real story is how faster, lower-latency responses change what AI can do for you.

When AI responds in real time, it stops being a tool you pick up and starts acting like a partner that works alongside you. The clearest example is Gemini Live’s camera and screen-sharing features, rolling out this week on iOS and Android. Your AI assistant can now see what you see and help at the moment you need it.

For business users, this means getting answers while walking through a store, reviewing products on the fly, or getting help during live client meetings. For developers, it could mean sharing your screen while you code so the assistant can point out errors as they appear.

Multimodal: The End of Single-Format AI

Google’s Gemini 2.5 Pro can take in text, images, audio, and video together. What often gets missed is how this changes the way you work with AI.

Rather than treating AI as a separate tool for each media type, the new systems let you work across formats in one place. The most forward-looking example is Flow, Google’s new AI filmmaking tool, which combines Veo 3 for video and Imagen 4 for images so you can create and edit content without switching between apps.

For content teams, this means you could start with a rough idea, ask Gemini to help you storyboard it with Imagen 4, then bring those storyboards to life with Veo 3, all while keeping the same creative direction. Veo 3 can even generate fitting sound effects and dialogue to match your vision.

From Simple Tools to Smart Agents

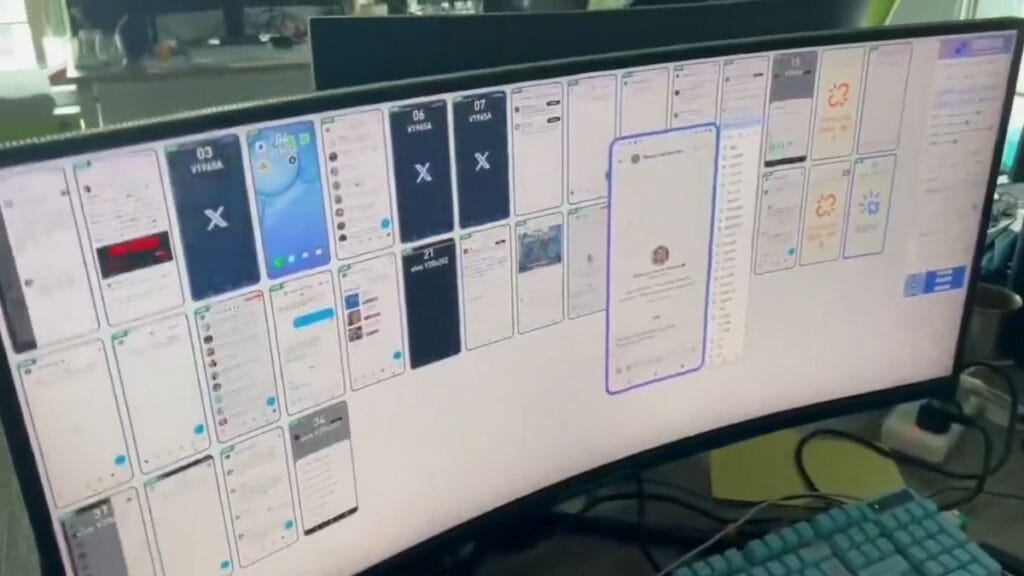

Project Mariner shows where Google is taking AI next. Most coverage stuck to the basic features, but the bigger picture is that Google is moving from tools that respond to your prompts to agents that take action on your behalf.

The updated Project Mariner can now handle up to 10 tasks at the same time, visiting websites and taking actions for you. This isn’t just about saving time; it changes what’s possible for businesses and developers.

For small business owners, this means AI could handle routine tasks like checking competitor prices, booking travel, or researching vendors while you focus on more important work. For developers, Jules, Google’s asynchronous coding agent, can fix bugs and open GitHub pull requests with less hands-on supervision.

The Price of Progress

Google’s new AI Ultra plan, at $249.99 per month, makes one thing clear: the best AI still costs money. But more telling than the price is what it reveals about Google’s business model.

Google is betting that power users will pay for top-tier AI, including access to Veo 3, Flow, and the upcoming Deep Think mode for Gemini 2.5 Pro. Charging for these features helps Google offset the high computing costs these systems require.

For businesses, this tiered approach lets you match AI costs to your needs. Teams that need advanced AI can use the premium tools, while others can stick with the free or lower-cost options.

Deep Think: The Next Step in AI Reasoning

The most overlooked announcement might be Deep Think, an enhanced reasoning mode for Gemini 2.5 Pro. Google shared few details, but the mode is designed to let the model weigh multiple possible answers before giving you its best response.

For complex problems, this could mean more reliable answers. If you use AI for product planning, financial analysis, or strategic decisions, Deep Think may deliver better results than the quick responses most AI models give today.

Google is still running safety tests on Deep Think before a wider release, which suggests the company is taking the risks of more powerful reasoning models seriously. Expect more safeguards as AI tools get better at reasoning.

Beam: The Future of Remote Work

Google’s 3D video communication platform Beam (formerly Project Starline) shows how AI might change remote work. Using an array of six cameras and a custom light field display, Beam aims to make remote colleagues feel like they’re in the room with you.

What sets Beam apart from past attempts at virtual meetings is its AI-powered head tracking, along with real-time speech translation that preserves the speaker’s voice and tone. Together, these aim to remove some of the barriers that make remote meetings feel flat and lifeless.

For global teams, this means better communication across language barriers. For client meetings, it means building trust through more natural interactions, even when you can’t meet in person.

New Ways to Build Apps

Stitch, Google’s new AI-powered tool for app and web design, lets you generate interfaces from text prompts or images. The tool is more limited than some competitors, but it shows how AI is changing app development.

What matters most about Stitch is that it bridges the gap between idea and code. Business users can mock up what they want, and Stitch can generate the HTML and CSS to make it real. This could speed up the design process and help teams test ideas faster.

For product teams, this means quicker prototyping and fewer gaps between what was planned and what gets built. For developers, it means spending less time on basic UI work and more time on complex features.

AI in Chrome: The Browser Gets Smarter

Google is bringing Gemini directly into Chrome, turning the browser into an assistant that understands the page you’re viewing. It can help you make sense of web pages and finish tasks faster.

This moves AI from a separate tool to part of your browsing experience. For business users, this could mean getting quick insights while researching, comparing products more easily, or filling out forms with less typing.

For content creators, it means your readers might interact with your content in new ways, asking questions and getting answers without leaving the page.

What This Means for Developers

If you build apps or websites, Google I/O 2025 signals big changes:

The new Gemma 3n model is built to run on phones and tablets, opening up ways to use AI without sending data to the cloud. On-device processing can mean faster responses and better privacy for your users.

Android Studio’s new AI features, such as Journeys and Agent Mode, can take on more complex development tasks. The crash insights feature can even analyze your code to pinpoint the likely cause of a crash.

Google Play’s updates give you better tools for managing subscriptions and now let you halt a live app release if problems come up. New topic browse pages also help users find apps related to their interests.

What This Means for Content Creators

For those who make videos, write, or create other content, Google’s AI tools offer new possibilities:

Flow lets you create and edit videos with AI, so you can spend less time on routine edits and more on creative decisions.

NotebookLM’s upcoming Video Overviews feature will turn your sources, such as notes, PDFs, and images, into visual summaries, helping you research and organize information.

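SynthID Detector lets you check whether an image, video, audio clip, or piece of text carries a SynthID watermark, which marks content made with Google’s AI tools. It can help you spot some AI-generated content, though it won’t flag material from tools that don’t use SynthID.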

What This Means for Business Users

If you run a business or team, Google’s AI updates could change how you work:

Gmail’s new smart replies and inbox-cleaning features can save time on email.

Google Meet’s real-time speech translation aims to keep your voice and tone intact while breaking down language barriers.

Project Mariner can handle online tasks for you, from booking travel to comparing products.

The Path Forward

Google I/O 2025 shows AI moving from simple chatbots to smart systems that can see, hear, and act in the world. The best AI tools now work across different media types, respond in real time, and can take action on your behalf.

For most users, the biggest change will be how AI fits into daily work. Rather than opening a separate AI tool when you need help, you’ll find AI built into the apps you already use, ready to help when you ask.

As these tools improve, think about how they might fit into your work or business. Companies that adapt early are likely to gain an edge as AI becomes a standard part of how we work.

What AI tools are you most excited to try? Start small with one task you do often, and see if AI can help you do it better or faster.