Google’s new Gemini Diffusion model represents more than just another fast AI tool. It shows us a different way to think about how machines create text and code. While most people focus on its speed, the real story lies in how it processes information and what this means for developers and businesses.

The Technical Shift That Matters

Most language models work like we do when we write. They predict one word, then use that word to predict the next one, creating a chain of decisions. This approach, called autoregressive generation, has one major problem. Once the model picks a word, it can’t go back and fix it if later context shows the choice was wrong.

Gemini Diffusion works differently. It starts with random noise and refines the entire response over a series of passes. Think of it like a sculptor working on marble. Instead of carving from left to right, the sculptor works on the whole statue at the same time, sharpening details with each pass.

This parallel approach lets the model see the whole picture while it works. If it notices an error in one part, it can fix it without breaking the flow. Traditional models get stuck with early mistakes because they build responses word by word.
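The contrast between the two generation styles can be sketched with a toy example. This is only an illustration of the control flow, not how Gemini Diffusion is implemented: `TARGET`, `VOCAB`, `flawed_next`, and `toy_denoise` are hypothetical stand-ins, and the "denoiser" is an oracle that already knows the answer, where a real system would use a trained model.

```python
import random

TARGET = ["the", "cat", "sat", "on", "the", "mat"]
VOCAB = ["cat", "dog", "mat", "on", "ran", "sat", "the"]


def autoregressive(predict_next, length):
    """Left to right: each token is committed before the next is chosen."""
    out = []
    for _ in range(length):
        out.append(predict_next(out))  # earlier tokens are never revisited
    return out


def diffusion_style(denoise, length, steps, rng):
    """Start from random tokens, then refine every position on each pass."""
    seq = [rng.choice(VOCAB) for _ in range(length)]
    history = [list(seq)]
    for step in range(steps):
        seq = denoise(seq, step, steps)  # any position may change on any pass
        history.append(list(seq))
    return seq, history


def flawed_next(prefix):
    # Toy predictor with one early mistake at position 1 ("dog" for "cat").
    return "dog" if len(prefix) == 1 else TARGET[len(prefix)]


def toy_denoise(seq, step, steps):
    # Oracle stand-in for a trained denoiser: each pass repairs a larger
    # share of positions, and the last pass covers all of them.
    return [TARGET[i] if i % steps <= step else tok for i, tok in enumerate(seq)]


ar_out = autoregressive(flawed_next, len(TARGET))
diff_out, passes = diffusion_style(toy_denoise, len(TARGET), 3, random.Random(0))
print(" ".join(ar_out))    # the early "dog" stays in the output
for p in passes:
    print(" ".join(p))     # noise first, then progressively cleaner passes
```

The autoregressive loop has no step at which the early mistake could be revisited, while the refinement loop rewrites the whole sequence on every pass.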

Why Speed Matters More Than You Think

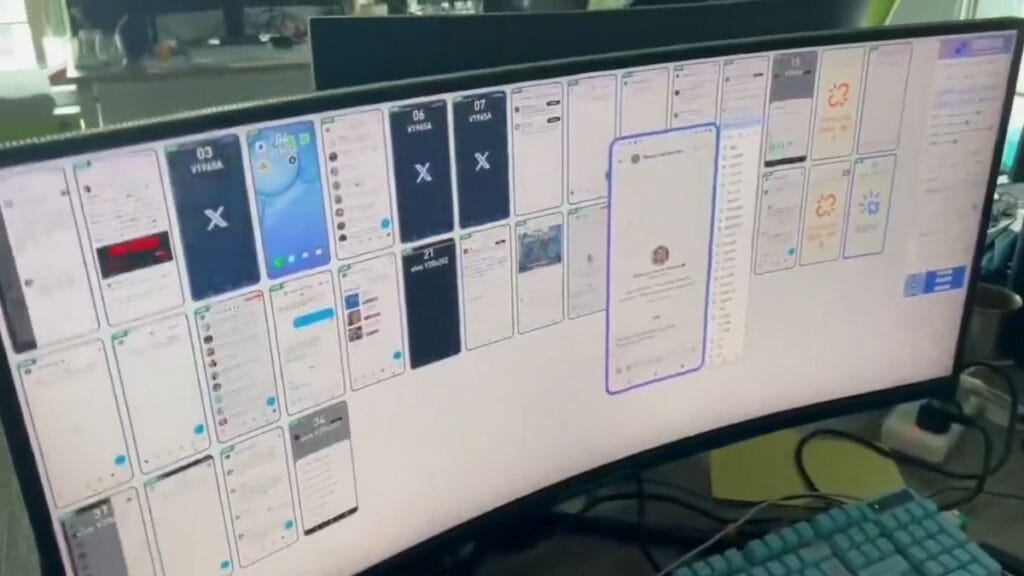

The demo shows Gemini Diffusion creating apps in seconds and generating 1,300 tokens per second. But speed isn’t just about impatience. It changes how you can work with AI.

Fast generation means you can iterate quickly. Instead of waiting 30 seconds for a response, then finding errors and waiting another 30 seconds for fixes, you get results in 2-3 seconds. This speed lets you test ideas, spot problems, and refine solutions in real time.
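A quick back-of-the-envelope calculation shows what that difference means for a working session. The response times and the 1,300 tokens per second figure come from the text above; the ten-minute session and the 20 seconds of review per response are assumptions made only for this sketch.

```python
def rounds_per_session(session_s, generation_s, review_s):
    """How many generate-then-review rounds fit in one working session."""
    return int(session_s // (generation_s + review_s))


SESSION_S = 10 * 60   # assumed: a ten-minute working session
REVIEW_S = 20         # assumed: time spent reading each response

slow = rounds_per_session(SESSION_S, 30, REVIEW_S)    # 30 s per response
fast = rounds_per_session(SESSION_S, 2.5, REVIEW_S)   # midpoint of 2-3 s
tokens_in_fast_response = 1300 * 2.5                  # at 1,300 tokens/s

print(slow, fast, tokens_in_fast_response)  # 12 26 3250.0
```

Under these assumptions the number of rounds roughly doubles, because review time starts to dominate. The gain grows as the time spent reviewing each response shrinks.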

For developers, this changes the entire workflow. You can treat AI generation more like a live coding assistant than a batch process. The model generates HTML games, animations, and interactive elements fast enough to feel responsive.

Real Applications for Business Workflows

The speed and correction abilities open up new use cases that weren’t practical before.

Rapid Prototyping: Teams can generate multiple versions of interfaces, animations, or small applications in minutes rather than hours. The model creates functional HTML/CSS/JavaScript combinations that work immediately. Product managers can test concepts with stakeholders while everyone is still in the meeting.

Iterative Development: The correction mechanism means fewer broken generations. Traditional models often produce code that looks right but has subtle errors. Diffusion models can catch and fix some of these issues during generation, which reduces debugging time.

Content Localization: The model translated content into 40 languages in seconds. For marketing teams managing global campaigns, this speed makes it practical to generate and test localized content variations quickly.

Educational Tools: Teachers and trainers can create interactive examples and simulations on demand. The model generates working code for concepts like physics simulations or data visualizations fast enough to use during live presentations.

The Coherence Advantage

Traditional models sometimes lose track of what they’re doing in long responses. They might start writing about one topic and drift to another, or create code that works in parts but fails as a whole.

Diffusion models maintain better coherence because they process the entire response simultaneously. The model can ensure that the beginning, middle, and end all work together. This matters most for complex tasks like creating applications or writing technical documentation.

Implementation Strategies for Teams

Start Small: Begin with simple tasks that need quick iterations. Use the model for generating UI mockups, simple games, or interactive examples. Build confidence with the tool before tackling complex projects.

Focus on Speed-Critical Tasks: Identify workflows where waiting for AI responses creates bottlenecks. Content creation, rapid prototyping, and idea testing benefit most from fast generation.

Build Correction Workflows: Take advantage of the model’s ability to fix itself. Create prompts that ask for improvements or corrections to existing outputs. The speed makes this back-and-forth practical.

Combine with Traditional Models: Use diffusion models for rapid generation and traditional models for complex reasoning. This hybrid approach gets you speed when you need it and depth when the task requires it.
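The last two strategies can be sketched together as a small routing and correction loop. Everything here is hypothetical: `route`, `refine`, the task labels in `FAST_TASKS`, and the stand-in models are illustrative names, not part of any real API. In practice each model would be a function that wraps whichever client your team uses.

```python
from typing import Callable, Optional, Tuple

Model = Callable[[str], str]

FAST_TASKS = {"mockup", "prototype", "draft"}  # assumed task labels


def route(task_kind: str, fast: Model, careful: Model) -> Model:
    """Pick the fast model for speed-critical work, the careful one otherwise."""
    return fast if task_kind in FAST_TASKS else careful


def refine(generate: Model, check: Callable[[str], Optional[str]],
           prompt: str, max_rounds: int = 3) -> Tuple[str, bool]:
    """Generate, check, and re-prompt with the reported problem."""
    output = generate(prompt)
    for _ in range(max_rounds):
        problem = check(output)
        if problem is None:
            return output, True
        output = generate(
            f"{prompt}\n\nPrevious attempt:\n{output}\n\nFix this problem: {problem}"
        )
    return output, check(output) is None


# Stand-in models so the sketch runs without any API access.
def fake_fast(prompt: str) -> str:
    return "<button>Start</button>" if "Fix this problem" in prompt else "<button>Start"


def fake_careful(prompt: str) -> str:
    return "analysis: " + prompt


def has_closing_tag(output: str) -> Optional[str]:
    return None if "</button>" in output else "missing </button>"


model = route("prototype", fake_fast, fake_careful)
result, ok = refine(model, has_closing_tag, "Make a start button")
print(result, ok)
```

The loop is capped at `max_rounds` so a model that never passes the check cannot run forever, and the returned flag tells the caller whether the final output still needs human review.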

What This Signals About AI Development

The success of text diffusion models suggests we might see similar approaches in other areas. The core insight is that parallel processing with iterative refinement often works better than sequential prediction.

We already see this pattern in image generation, where diffusion models dominate. Audio generation is starting to adopt similar approaches. The pattern might extend to code compilation, data analysis, and other tasks that traditionally work step by step.

This shift also suggests that AI development might move away from bigger models that do everything toward specialized models that excel at specific types of processing: diffusion models for fast generation, autoregressive models for reasoning, and other architectures for other tasks.

The Current Limitations

Gemini Diffusion isn’t as capable as advanced models like Claude or GPT-4 for complex reasoning tasks. It excels at generation but struggles with deep analysis or complex problem-solving.

The model also behaves inconsistently. Sometimes it refuses simple requests; other times it handles complex ones easily. This unpredictability makes it better suited for experimentation than production use right now.

The focus on speed sometimes comes at the cost of accuracy. While the model can fix some errors during generation, it might miss others that a slower, more careful approach would catch.

Is Diffusion the Way?

Diffusion models for text generation represent a new direction rather than just an improvement. They show us that the way we generate text doesn’t have to mirror how humans write.

The real question isn’t whether diffusion models will replace traditional language models, but how they’ll work together: fast generation for ideation and prototyping, careful reasoning for analysis and planning.

For now, Gemini Diffusion offers a glimpse of what’s possible when we rethink the basics of text generation. The speed and coherence improvements suggest this approach has room to grow.

If you work with AI regularly, try to get access and experiment with it. Focus on tasks where speed matters more than perfection. See how the different approach changes your workflow and what new possibilities it opens up.