In a web world where AI companies hungrily scrape content to train their models, website owners now have a clever new defense. Cloudflare’s AI Labyrinth doesn’t just block bots – it tricks them with fake content, wasting their resources while keeping your real data safe. This fresh approach might change how we protect digital content in the age of AI.

Why AI Scraping Has Become a Major Problem

AI systems need massive amounts of content to learn from. This has led to widespread scraping of websites, often without permission. Studies show that AI chatbots like ChatGPT and Perplexity still access content from sites that explicitly block their crawlers.

According to Cloudflare, these crawlers generate more than 50 billion requests to its network every day, just under 1% of all the web requests it sees. This isn’t just an ethical issue; it’s a server load issue that costs site owners money and bandwidth.
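To put that figure in perspective, here is a back-of-the-envelope calculation. It treats “more than 50 billion” as a lower bound and rounds the share to 1%, so the results are rough estimates rather than numbers published by Cloudflare. The function names are made up for this example.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def per_second(requests_per_day: float) -> float:
    """Convert a daily request count into an average per-second rate."""
    return requests_per_day / SECONDS_PER_DAY


def implied_total(requests_per_day: float, share: float) -> float:
    """Estimate total daily traffic if the given count is `share` of it."""
    return requests_per_day / share


if __name__ == "__main__":
    crawler_daily = 50e9  # "more than 50 billion", so treat as a lower bound
    assumed_share = 0.01  # assumption: the share rounded to 1%

    print(f"{per_second(crawler_daily):,.0f} crawler requests per second")
    print(f"{implied_total(crawler_daily, assumed_share):,.0f} total requests per day")
```

That works out to roughly 580,000 AI crawler requests every second, out of about 5 trillion requests per day across the network.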

For publishers, artists, and content creators, watching AI companies take their work without consent to build profitable products has sparked outrage. The simple “robots.txt” approach to blocking crawlers has proven ineffective because compliance is voluntary, and many AI companies simply ignore these directives.
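For context, this is what a robots.txt check looks like from the crawler’s side. The sketch below uses Python’s standard `urllib.robotparser` and an example robots.txt that disallows two AI crawler user agents. The file contents and the `is_allowed` helper are illustrative assumptions, not taken from any particular site.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: ask two AI crawlers to stay away, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots.txt permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "PerplexityBot", "Mozilla/5.0"):
        print(agent, is_allowed(agent, "https://example.com/articles/1"))
```

The important detail is that this check runs inside the crawler. Nothing on the server enforces it, so a crawler that skips the check gets the page anyway.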

How AI Labyrinth Works: A Technical Look

Rather than giving crawlers a “403 Forbidden” error, AI Labyrinth sends them down a rabbit hole of AI-generated content that looks real but isn’t related to your site’s actual content. This serves two purposes:

- It protects your real content from being scraped

- It wastes the crawler’s computational resources

The tool uses pre-generated synthetic content stored in Cloudflare’s R2 storage system. When a crawler is detected, the system serves these fake pages instead of your real content. The pages are linked together, creating a maze that can waste significant crawler resources.
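The idea can be illustrated with a toy model. This is a minimal sketch, not Cloudflare’s implementation: detection is reduced to a boolean passed in by the caller, the decoy text is a placeholder, and the `/maze/` paths and all function names are hypothetical.

```python
import random


def build_maze(page_count: int, links_per_page: int = 3, seed: int = 0) -> dict:
    """Pre-generate decoy pages: map each decoy path to the decoys it links to."""
    rng = random.Random(seed)
    paths = [f"/maze/{i}" for i in range(page_count)]
    maze = {}
    for path in paths:
        others = [p for p in paths if p != path]
        maze[path] = rng.sample(others, k=min(links_per_page, len(others)))
    return maze


def render_decoy(path: str, maze: dict) -> str:
    """Render one decoy page whose links lead only to other decoys."""
    links = "".join(f'<a href="{target}">Read more</a>' for target in maze[path])
    return f"<html><body><p>Placeholder filler for {path}</p>{links}</body></html>"


def handle_request(path: str, flagged_as_crawler: bool, maze: dict, real_pages: dict) -> str:
    """Serve decoys to flagged crawlers and real pages to everyone else."""
    if flagged_as_crawler:
        # Any path a flagged crawler asks for lands somewhere in the maze.
        decoy_path = path if path in maze else next(iter(maze))
        return render_decoy(decoy_path, maze)
    return real_pages.get(path, "404 Not Found")


if __name__ == "__main__":
    maze = build_maze(page_count=5, links_per_page=2)
    real_pages = {"/about": "REAL ABOUT PAGE"}
    print(handle_request("/about", False, maze, real_pages))
    print(handle_request("/about", True, maze, real_pages))
```

Because every link on a decoy page points to another decoy, a crawler that follows links stays inside the maze and never reaches the real pages.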

What makes this approach smart is that human visitors never see these fake pages – they’re invisible to normal site navigation and don’t affect your SEO or site structure.

Beyond Simple Blocking: The Strategic Advantage

Traditional bot-blocking tools alert scrapers that they’ve been detected, which often leads to an arms race as scrapers adapt their methods. AI Labyrinth takes a different approach by letting crawlers think they’ve successfully gathered content.

This creates several advantages:

- Scrapers waste resources processing useless data

- Your real content stays protected

- The crawler might not realize it’s been fooled

- The system acts as a honeypot to identify new scraping methods

This last point is particularly valuable. Cloudflare reasons that no real human would go “four links deep into a maze of AI-generated nonsense,” so any visitor that does is very likely a bot. Tracking those visitors lets Cloudflare identify new crawlers and improve its detection systems.
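A simple way to picture the honeypot logic is a per-client counter. The sketch below is an assumption-heavy illustration: it approximates “depth” as the number of distinct decoy pages a client has requested, and the `/maze/` prefix, the class name, and the client identifiers are all hypothetical.

```python
from collections import defaultdict

MAZE_PREFIX = "/maze/"  # hypothetical path prefix for decoy pages
DEPTH_THRESHOLD = 4     # mirrors the "four links deep" heuristic


class MazeDepthTracker:
    """Flag clients that keep following links inside the decoy maze."""

    def __init__(self, threshold: int = DEPTH_THRESHOLD):
        self.threshold = threshold
        self._decoys_seen = defaultdict(set)

    def record(self, client_id: str, path: str) -> bool:
        """Record a request; return True once the client looks like a bot."""
        if path.startswith(MAZE_PREFIX):
            self._decoys_seen[client_id].add(path)
        seen = self._decoys_seen.get(client_id, set())
        return len(seen) >= self.threshold


if __name__ == "__main__":
    tracker = MazeDepthTracker()
    for step in range(1, 5):
        flagged = tracker.record("crawler-a", f"/maze/{step}")
        print(step, flagged)
```

A human who only browses real pages never touches the counter, which is why a signal like this can be treated as strong evidence of automation.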

Who Needs This Tool Most?

AI Labyrinth offers the most value to:

News Publishers: Outlets have found their content misrepresented by AI systems trained on their work. Keeping scrapers away from original reporting limits that risk.

Creative Professionals: Artists, photographers, and writers often see their work scraped to train AI systems that then compete with them.

Technical Documentation Sites: Companies publish valuable technical information that shouldn’t be repurposed without proper attribution.

E-commerce Sites: Product descriptions and specifications are prime targets for AI training data.

For small site owners, the tool offers protection without requiring complex technical knowledge. Since it’s available on Cloudflare’s free tier, it puts powerful protection within reach of individual creators and small businesses.

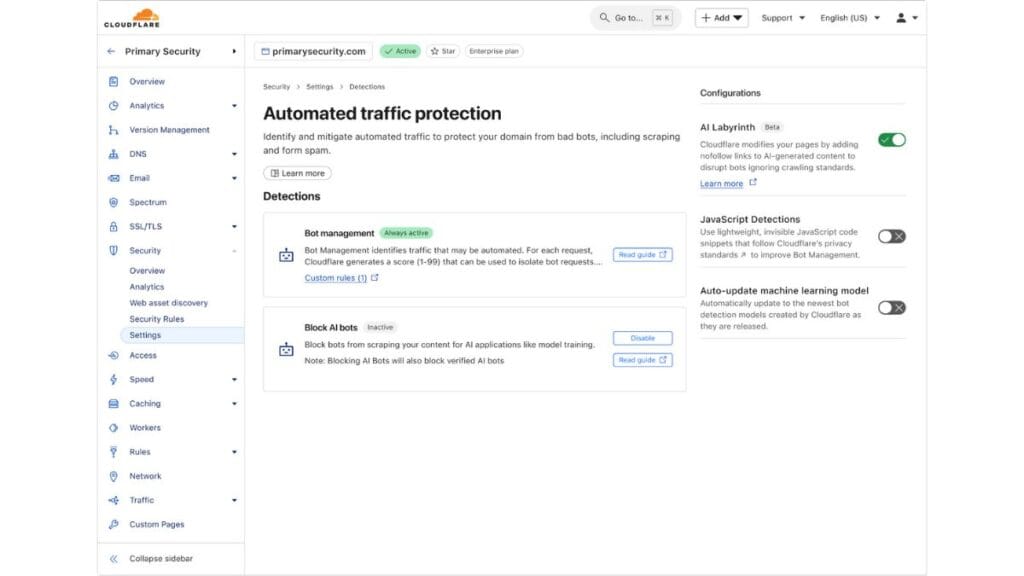

Setting Up AI Labyrinth: A Quick Guide

Enabling AI Labyrinth is straightforward:

- Log into your Cloudflare dashboard

- Navigate to Security > Bot Management

- Find the AI Labyrinth toggle and switch it on

No special rules or configurations are needed. The system automatically deploys when it detects unauthorized bot activity.

The Ethical Angle: Fighting AI With AI

Cloudflare made a point of ensuring its tool doesn’t add to the internet’s misinformation problem. The decoy content is grounded in real scientific facts; it is simply unrelated to the site being protected.

This creates an interesting scenario where AI is used to protect against AI, but in a way that aims to limit harm to the broader web ecosystem. Rather than flooding the internet with nonsense, it creates targeted fake content only shown to scrapers.

What This Means for the Future of Web Content

AI Labyrinth signals a shift in how we think about content protection. Instead of just building higher walls, we’re now creating clever traps for those who try to climb over them.

This approach might start a new phase in the relationship between content creators and AI companies. If enough sites adopt tools like AI Labyrinth, it could force AI companies to negotiate fair terms with content creators rather than simply taking what they want.

For web developers and site owners, this points to a future where content protection becomes more sophisticated. Rather than just blocking access, we may see more tools that provide misleading or useless data to unauthorized scrapers.

What AI Labyrinth Doesn’t Solve

While AI Labyrinth offers a clever solution, it doesn’t address all content protection issues:

- It works only on sites using Cloudflare

- It can’t help with content that’s already been scraped

- AI companies with enough resources might still find ways around it

- It doesn’t establish clear legal precedents about content rights

The tool also raises questions about detection accuracy. A false positive could send a legitimate crawler such as Googlebot into the labyrinth and hurt search rankings.

What You Should Do Next

If you’re concerned about AI systems using your content without permission, consider:

- Enabling AI Labyrinth if you use Cloudflare

- Adding clear terms of service on your site about AI scraping

- Monitoring your server logs for unusual crawling patterns

- Joining industry groups pushing for clearer AI training data regulations
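For the log-monitoring step, a short script is often enough to get a first picture. The sketch below assumes access logs in the common “combined” format, where the user agent is the last quoted field on each line. The list of crawler tokens is illustrative and far from complete, and user agents can be spoofed, so treat the counts as a starting point.

```python
import re
from collections import Counter

# Illustrative list of AI crawler user-agent tokens; extend it as needed.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "CCBot", "Bytespider")

# Assumption: the user agent is the last double-quoted field on the line.
LAST_QUOTED_FIELD = re.compile(r'"([^"]*)"\s*$')


def count_ai_crawler_hits(lines) -> Counter:
    """Count requests per known AI crawler token in access log lines."""
    hits = Counter()
    for line in lines:
        match = LAST_QUOTED_FIELD.search(line)
        if not match:
            continue  # skip lines that do not look like combined-format entries
        agent = match.group(1).lower()
        for token in AI_CRAWLER_TOKENS:
            if token.lower() in agent:
                hits[token] += 1
                break
    return hits


if __name__ == "__main__":
    sample = [
        '203.0.113.7 - - [10/Mar/2025:10:00:00 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
        '198.51.100.2 - - [10/Mar/2025:10:00:02 +0000] "GET /b HTTP/1.1" 200 734 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
    ]
    for token, count in count_ai_crawler_hits(sample).most_common():
        print(token, count)
```

To run it against a real file, pass an open file handle instead of the sample list, for example `count_ai_crawler_hits(open("access.log"))`, where the file name is a placeholder for your own log path.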

The battle for control over web content will likely intensify as AI systems grow more prevalent. Tools like AI Labyrinth give content creators a new weapon in their arsenal, but the war is far from over.

As AI companies and content creators continue this technological arms race, staying informed about new protection methods will be essential for anyone who values their digital content.

What do you think about using AI to fight against unauthorized AI scraping? Have you tried AI Labyrinth or similar tools? Share your experiences or ask questions in the comments.