Sam Altman, the face of OpenAI, took the stage at TED2025 to discuss the present and future of artificial intelligence. Beneath the talking points ran a deeper story about power, control, and the fundamental shifts AI is creating in our society. Let’s look at what Altman actually revealed between the lines.

The Shift from Chatbots to Agents: What This Actually Means

One of the most significant revelations from Altman’s interview was OpenAI’s clear pivot toward agentic AI. The demonstration of “Operator,” which can perform tasks like restaurant reservations, represents more than just a convenient feature—it signals a fundamental shift in how AI will interact with our digital and physical worlds.

What makes this shift particularly important is that agentic AI doesn’t just respond to prompts—it takes action. When asked about the safety implications, Altman acknowledged this represents “the most interesting and consequential safety challenge we have yet faced.”

For businesses and developers, this shift means:

- Security teams need entirely new frameworks for managing AI that can act on behalf of users

- API providers must prepare for a wave of AI-driven traffic that mimics human behavior

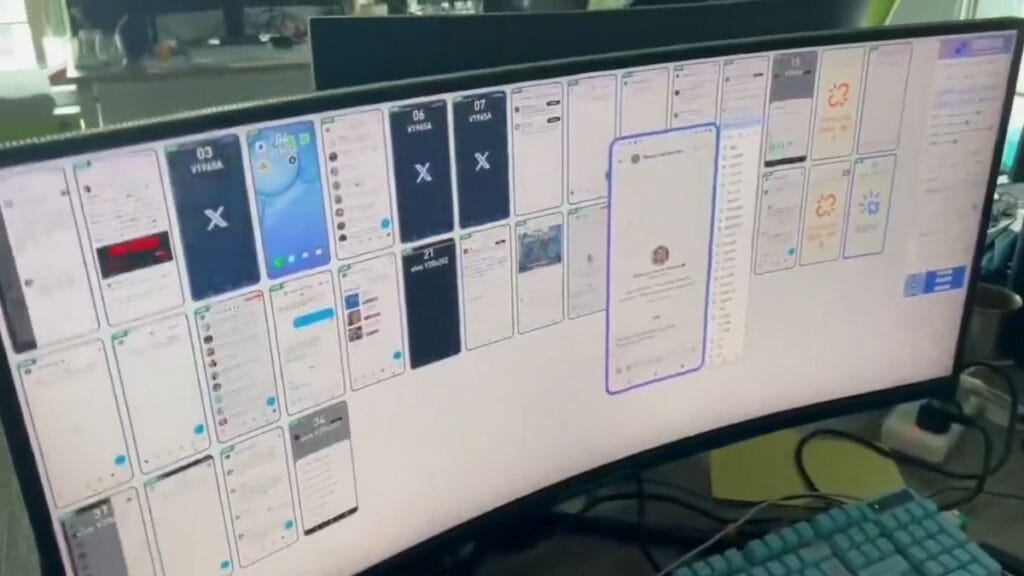

- User experience designers must rethink interfaces that will increasingly be navigated by AI rather than humans

Altman believes people will be “slow to get comfortable with agentic AI,” comparing it to the early days of putting credit card information online. However, this comparison understates the autonomy these systems will have. Credit card transactions follow strict protocols, while agentic AI will make judgment calls about what actions to take on our behalf.

The Reality Behind OpenAI’s Growth

Perhaps the most startling moment in the interview came when Altman casually mentioned that ChatGPT has approximately 500 million weekly active users and is “growing very rapidly.” For context, that is a scale that platforms like Instagram and Twitter (now X) took many years to reach.

This meteoric growth creates several underexplored realities:

- OpenAI is rapidly becoming one of the most influential companies in the world while maintaining a governance structure that’s neither fully profit-driven nor truly open

- The data from these interactions is creating a feedback loop that can strengthen OpenAI’s models and keep them ahead of competitors

- Infrastructure demands for this scale of operation are staggering, creating dependencies on partners like Microsoft

When asked about open-source competition like DeepSeek, Altman said: “I call people and beg them to give us their GPUs.” This admission reveals the hardware constraints that even the best-funded AI companies face, suggesting that access to computational resources, not just algorithms, will determine AI leadership.

The Memory Feature: The Beginning of AI Personalization at Scale

Altman highlighted OpenAI’s enhanced memory feature, where “this model will get to know you over the course of your lifetime.” He likened it to a gradual process: “It’s not that you plug your brain in one day, but… someday maybe if you want it’ll be listening to you throughout the day and sort of observing what you’re doing.”

This vision of AI has profound implications:

- AI systems will evolve from general-purpose tools to deeply personalized companions

- The value of your AI will increase with usage, creating potential lock-in effects

- Privacy boundaries will shift as AI requires more personal data to be effective

The comparison to the movie “Her” wasn’t lost on the audience, with Altman acknowledging that future AI systems might proactively help users rather than just responding to queries. This transition from reactive to proactive AI represents a significant shift in human-computer interaction that most users aren’t prepared for.

The Science and Software Development Angle

When asked what excites him most about upcoming developments, Altman pointed to “AI for science” as his personal focus. He suggested that we might see “meaningful progress against disease with AI-assisted tools” and advances in areas like physics.

For software development, Altman predicted “another move that big in the coming months as software engineering really starts to happen.” This suggests that current code assistance tools are just the beginning, with fully automated software engineering on the horizon.

For technical professionals, this means:

- Scientific researchers should prepare for AI tools that can propose experiments and analyze results

- Software development workflows will undergo another massive transformation

- The skills valued in technical fields will shift toward prompt engineering and system design

The Safety Contradiction

Throughout the interview, a contradiction emerged in Altman’s position on safety. On one hand, he emphasized OpenAI’s commitment to safety, citing their “preparedness framework.” On the other, he revealed a significant shift in their approach to content moderation.

“We’ve given users much more freedom on what we would traditionally think about as speech harms,” Altman stated, adding that they’re “taking a much more permissive stance now.” This represents a notable pivot from OpenAI’s previous conservative approach to content generation.

Altman’s justification for this shift was particularly telling: “We couldn’t point to real-world harm.” This signals that OpenAI may be moving away from preemptive restrictions toward a more reactive approach based on demonstrated harms.

For content creators and businesses using AI tools, this means:

- Expect fewer restrictions on creative content generation

- Be prepared for more responsibility in self-moderating AI outputs

- Watch for potential backlash if this more permissive approach leads to controversial content

The Tension Between Elites and Users

One of the most revealing moments came when Altman was asked about participating in a summit of ethicists and technologists to establish safety guidelines. His response: “I’m much more interested in what our hundreds of millions of users want as a whole.”

This statement positions OpenAI as more responsive to user preferences than to expert opinion, which Altman associated with decisions made “in small elite summits.” However, this populist framing overlooks the reality that OpenAI itself makes crucial decisions about capabilities and limitations, often without direct user input.

The tension between expert guidance and user preference will shape AI development in coming years, with companies like OpenAI potentially using user preferences to justify controversial decisions while still maintaining ultimate control over their systems.

The Parental Perspective

In a more personal moment, Altman discussed how becoming a parent has changed his perspective. While he claimed it hasn’t fundamentally altered his views on AI safety (“I really cared about like not destroying the world before, I really care about it now”), he admitted parenthood has changed how he allocates his time.

This glimpse into Altman’s personal life reveals an important dimension of AI leadership that’s rarely discussed: how the personal lives and values of a small group of leaders influence decisions that affect billions of people. As AI becomes more integrated into society, the values and priorities of those controlling these systems will have outsized impact.

The Future According to Altman

Altman’s vision for his child’s future revealed his ultimate optimism about AI: “My kids hopefully will never be smarter than AI. They will never grow up in a world where products and services are not incredibly smart, incredibly capable… It’ll be a world of incredible material abundance.”

This vision assumes AI development will continue largely unimpeded, resulting in tremendous benefits for humanity. Notably absent was discussion of potential downsides like job displacement, increased inequality, or loss of human agency—issues that many experts consider significant risks of advanced AI.

What This Means For Your Work

For professionals across industries, Altman’s interview signals several key developments to prepare for:

- Agentic AI will transform workflows – Begin identifying processes where AI agents could take over routine tasks

- The memory feature will create new personalization opportunities – Consider how your products or services might leverage AI that knows users deeply

- Content restrictions are loosening – Expect more creative freedom with AI tools, but also more responsibility for outputs

- Open-source AI is coming – Prepare for a world where powerful AI models are widely available beyond major companies

The pace of AI development shows no signs of slowing, with Altman comparing it to an “unbelievable exponential curve.” Whether this is cause for excitement or concern largely depends on your perspective on who benefits from and controls these technologies.

As AI capabilities continue to grow, the most important question might not be what these systems can do, but who decides how they’re used and for whose benefit. That question remains largely unanswered, even as companies like OpenAI race toward ever more powerful systems.