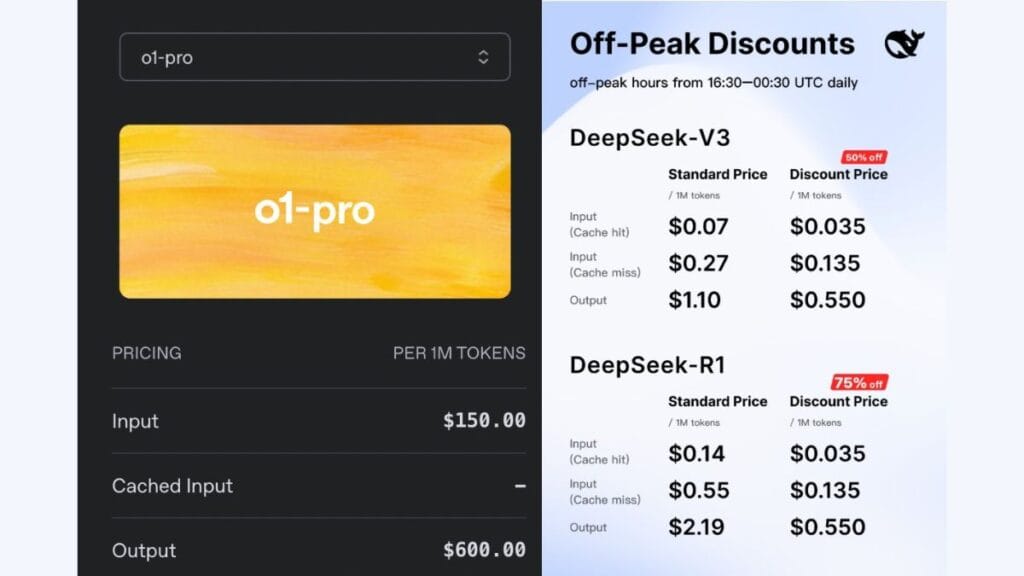

OpenAI recently added o1-pro to its API lineup, marking a significant step in the company’s pricing strategy with costs that have raised eyebrows across the developer community. At $150 per million input tokens and $600 per million output tokens, this new model represents OpenAI’s most expensive offering to date—twice the price of GPT-4.5 for inputs and ten times costlier than the standard o1 model.

But what does this premium pricing mean for developers, businesses, and the broader AI industry? Let’s look beyond the sticker shock to understand the practical implications and strategic considerations of this high-end AI option.

The Price-Performance Question

The fundamental issue with o1-pro isn’t just its cost, but whether that cost delivers proportional value. OpenAI claims the model “uses more compute to think harder and provide even better answers to the hardest problems.” However, early user impressions from the ChatGPT Pro subscription (where o1-pro has been available since December 2024) tell a more mixed story.

Reports show the model struggling with tasks like Sudoku puzzles and simple optical illusions. Even OpenAI’s internal benchmarks suggested o1-pro performed only marginally better than standard o1 on coding and math problems, though with greater reliability.

This raises a critical question: Is reliability worth a 10x price increase?

For mission-critical applications where mistakes carry significant costs, the answer might be yes. But for most use cases, developers will need to make careful calculations about the return on investment.
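One way to frame that calculation is as a break-even point: the premium model pays off once the cost of a single mistake, multiplied by the reduction in error rate, exceeds the extra API spend. The sketch below is a hypothetical helper, not anything published by OpenAI. The per-query costs assume a request with 2,000 input and 1,000 output tokens at the prices quoted above ($0.90 on o1-pro, a tenth of that on standard o1), and the error rates are made-up placeholders you would replace with your own measurements.

```python
def break_even_error_cost(
    premium_api_cost: float,
    standard_api_cost: float,
    standard_error_rate: float,
    premium_error_rate: float,
) -> float:
    """Return the cost of one mistake at which the premium model breaks even.

    Expected cost per task is modeled as: api_cost + error_rate * error_cost.
    Setting the two models' expected costs equal and solving for error_cost
    gives the threshold returned here.
    """
    rate_gain = standard_error_rate - premium_error_rate
    if rate_gain <= 0:
        raise ValueError("premium model must have a lower error rate")
    return (premium_api_cost - standard_api_cost) / rate_gain


# Hypothetical inputs: $0.90 vs. $0.09 per query, 5% vs. 3% error rate.
threshold = break_even_error_cost(0.90, 0.09, 0.05, 0.03)
print(f"Premium pays off once a mistake costs more than ${threshold:.2f}")
```

With these placeholder numbers the threshold works out to roughly $40 per mistake. If an error in your application costs less than that to catch and fix, the cheaper model is the better deal under this simple model.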

Breaking Down the Actual Costs

To put these prices in perspective, let’s consider some real-world scenarios:

A complex reasoning query with:

- 2,000 tokens of input (about 1,500 words)

- 1,000 tokens of output (about 750 words)

Cost breakdown:

- Input: 2,000 tokens × $150/million = $0.30

- Output: 1,000 tokens × $600/million = $0.60

- Total: $0.90 per query

For a service handling 10,000 such queries monthly, that’s $9,000—a substantial expense that would need to be justified by either:

- Premium pricing passed on to end users

- Significant competitive advantages

- Cost savings elsewhere in the business process
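The arithmetic above is easy to script so you can plug in your own token counts and volumes. This is a minimal sketch: the `Pricing` class and function names are illustrative, and the only real figures are the o1-pro prices quoted in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in US dollars."""
    input_per_million: float
    output_per_million: float


# o1-pro prices as quoted in this article.
O1_PRO = Pricing(input_per_million=150.0, output_per_million=600.0)


def query_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of a single request."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


def monthly_cost(pricing: Pricing, input_tokens: int, output_tokens: int,
                 queries_per_month: int) -> float:
    """Dollar cost of a month of identical requests."""
    return query_cost(pricing, input_tokens, output_tokens) * queries_per_month


# The scenario from the text: 2,000 tokens in, 1,000 tokens out, 10,000 queries.
print(f"${query_cost(O1_PRO, 2_000, 1_000):.2f} per query")
print(f"${monthly_cost(O1_PRO, 2_000, 1_000, 10_000):,.2f} per month")
```

Keep in mind that reasoning models generally bill their hidden reasoning tokens as output, so the output count you pass in should reflect billed tokens, which can be well above the length of the visible answer.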

As one developer commented on OpenAI’s announcement, “That’s $30 per request. Hoping the free 1M tokens per day includes this.” (The complimentary token program almost certainly won’t cover o1-pro usage.)

The Strategic Access Threshold

OpenAI has limited o1-pro access to “select developers on tiers 1-5”, which in practice means those who have spent at least $5 on OpenAI API services. This relatively low financial barrier, paired with extraordinarily high usage costs, suggests a strategic approach:

- Make the model technically accessible to almost any serious developer

- Let usage costs naturally limit adoption to applications where the value justifies the expense

- Gather data on which use cases prove most valuable at this price point

This contrasts with previous models where access was more tightly controlled through waitlists and approval processes. The shift suggests OpenAI is testing price elasticity in the market while still maintaining some baseline quality control through the $5 minimum spend.

Technical Reality Behind “More Compute”

When OpenAI says o1-pro “uses more compute,” what does this actually mean for the model’s capabilities?

In traditional software, more processing power mostly buys speed. Reasoning models spend extra compute differently. For o1-pro, more compute likely means:

- Longer thinking chains with more internal reasoning steps

- More thorough exploration of potential solution paths

- Multiple internal verification passes to check work

- Higher precision in calculations and reasoning processes

This additional computation does not necessarily make the model “smarter” in terms of base capabilities, but rather more thorough and careful in applying its existing knowledge—similar to how a person might double-check their work on an important test.

The trade-off, besides cost, is likely speed. More computation means longer processing times, which could impact real-time applications.

Where o1-pro Makes Financial Sense

Despite the high price, certain applications could justify o1-pro’s cost:

Financial Analysis and Trading: When AI assists in making investment decisions, even small improvements in accuracy can translate to significant returns. A system managing millions in assets could easily justify premium AI costs if it improves performance by even fractions of a percent.

Legal Contract Analysis: Missing critical details in legal documents can result in massive financial or legal consequences. The improved reliability of o1-pro could prevent costly errors in high-stakes contract reviews.

Medical Decision Support: While AI should never replace medical professionals, tools that help doctors analyze complex cases benefit from maximum reliability. The cost difference between o1 and o1-pro becomes trivial when patient outcomes are at stake.

Engineering Safety Systems: For applications involving public safety or critical infrastructure, the highest possible reliability is essential, making o1-pro’s premium worthwhile.

The Price Competition Landscape

OpenAI’s premium pricing doesn’t exist in a vacuum. Several commenters on the announcement immediately compared o1-pro’s cost to alternatives:

“Sonnet Max at $1-2 makes more sense, or just copy and pasting to an OpenAI Pro account.”

This highlights a key market dynamic: users can and will seek out cost-effective alternatives when pricing exceeds perceived value. At current rates, many developers may opt for:

- Using Anthropic’s Claude models for complex reasoning tasks

- Deploying Mistral or Llama models for cost-sensitive applications

- Creating hybrid systems that use premium models only when necessary

- Manually transferring complex tasks to ChatGPT Pro ($200/month) rather than paying per API call

As one commenter noted: “I can’t wait to see the cost curve on this one plummet over time.” This expectation of falling prices reflects the broader trend in AI development, where cutting-edge capabilities typically become more affordable as the technology matures.

The Enterprise Calculation

For enterprise customers, the calculation extends beyond simple per-token costs. When considering o1-pro, businesses need to weigh factors like:

Integration Costs: Switching between different AI providers carries significant engineering overhead. Companies already invested in the OpenAI ecosystem might find it more cost-effective to pay premium prices than to manage multiple vendors.

Compliance and Security: OpenAI offers enterprise-grade security and data handling. For regulated industries, these protections may justify higher costs compared to alternatives with less robust compliance features.

Support and Reliability: Enterprise SLAs and support agreements provide value beyond the raw AI capabilities. The “insurance policy” of working with an established provider factors into the total cost evaluation.

Internal Efficiency Gains: If o1-pro helps knowledge workers complete tasks more accurately, the productivity improvements might far outweigh the increased AI costs.

Developer Strategies for Cost Management

For developers working with OpenAI’s new pricing structure, several strategies can help manage costs while still leveraging the capabilities of premium models:

Tiered AI Approach: Use less expensive models for initial processing, then selectively upgrade to o1-pro only for the most critical reasoning tasks.

Prompt Engineering Optimization: Careful prompt design can reduce token counts and increase response quality, potentially reducing the need for premium models.

Caching and Result Reuse: For applications with repeated or similar queries, caching results can dramatically reduce API calls.

Local Processing Hybrid: Use local models for basic tasks and reserve API calls for complex reasoning that truly requires o1-pro’s capabilities.
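The tiered and caching strategies combine naturally. The sketch below is one possible shape for that, under stated assumptions: `TieredClient` is a hypothetical class, the two model functions are stubs standing in for real API or local-model calls, and the `needs_premium` check is a deliberately naive placeholder for whatever routing rule fits your application.

```python
from typing import Callable, Dict

ModelFn = Callable[[str], str]


class TieredClient:
    """Route prompts to a cheap or premium model and cache the answers."""

    def __init__(self, cheap: ModelFn, premium: ModelFn,
                 needs_premium: Callable[[str], bool]) -> None:
        self._cheap = cheap
        self._premium = premium
        self._needs_premium = needs_premium
        self._cache: Dict[str, str] = {}
        self.premium_calls = 0  # lets you track how often the costly path runs

    def ask(self, prompt: str) -> str:
        # Collapse whitespace so trivially different prompts share a cache entry.
        key = " ".join(prompt.split())
        if key in self._cache:
            return self._cache[key]
        if self._needs_premium(prompt):
            self.premium_calls += 1
            answer = self._premium(prompt)
        else:
            answer = self._cheap(prompt)
        self._cache[key] = answer
        return answer


# Stub backends (assumptions): replace with real model calls.
client = TieredClient(
    cheap=lambda p: f"[cheap model] {p}",
    premium=lambda p: f"[premium model] {p}",
    # Hypothetical routing rule: only contract reviews go to the premium model.
    needs_premium=lambda p: "contract" in p.lower(),
)

client.ask("Review this contract clause")    # premium call
client.ask("Review  this contract  clause")  # served from cache
client.ask("Summarize this memo")            # cheap call
print(f"Premium calls made: {client.premium_calls}")
```

An in-memory dictionary is enough to show the idea. A production system would likely want an expiring or persistent cache, and a routing rule based on measured task difficulty rather than a keyword match.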

Future Pricing Trajectories

Historical patterns in technology pricing suggest that what’s premium today becomes standard tomorrow. Several forces will likely drive down the effective cost of AI reasoning capabilities:

Competition: As other providers release competing reasoning models, price pressure will increase.

Efficiency Improvements: Technical advancements typically allow the same capabilities to run on less computing power over time.

Scale Economics: As adoption grows, fixed costs can be distributed across more users.

The premium pricing of o1-pro should be viewed as a snapshot of current market conditions rather than a permanent state. As one commenter noted, “Gonna revisit this in a year and remind myself how spoiled we are then.”

What This Means for AI Accessibility

The introduction of extremely high-priced AI tiers raises important questions about AI access and industry stratification:

Will premium AI create a two-tiered system where only well-funded companies can access the best tools?

Can smaller developers and startups remain competitive if they can’t afford premium AI capabilities?

How will this affect innovation if cutting-edge AI becomes a luxury good?

The answers aren’t simple, but these questions highlight the tension between commercial incentives and the broader benefits of accessible AI technology.

Making the Right Choice for Your Projects

When deciding whether o1-pro makes sense for your specific needs, consider:

Task Criticality: How important is maximum reliability for your specific use case?

Budget Reality: Can your pricing model support the additional costs, or would those resources better serve other aspects of your project?

Competitive Necessity: Does your application category require best-in-class AI to remain competitive?

User Expectations: Will your users notice and value the improvements that o1-pro provides?

For many applications, the standard o1 model or even GPT-4-class models will provide sufficient capabilities at more reasonable costs. Reserve premium options for tasks where the added reliability truly matters.

The Path Forward

OpenAI’s o1-pro represents more than just a new model: it signals a maturing AI market where premium tiers with premium pricing become normal. As this trend continues, developers and businesses will need to become more sophisticated in how they select and deploy AI capabilities.

The good news is that even as high-end models push pricing boundaries, the overall cost of AI continues to fall when measured by capability per dollar. Today’s luxury features will be tomorrow’s standard offerings.

For now, approach o1-pro with clear eyes about its costs and benefits. Test it against alternatives. Measure the actual value it provides in your specific applications. And remember that in technology, what seems expensive today often becomes affordable sooner than we expect.

What AI capabilities would justify premium pricing in your projects? Think about where reliability and advanced reasoning would provide genuine value—and where simpler models might serve just as well.