Google has added Chirp 3, its high-definition voice model, to the Vertex AI platform. The move signals a broader shift in generative AI, with attention turning from text-based interfaces toward voice applications. Text generation has dominated AI development so far, but voice looks like the next big frontier, and it is arriving quickly.

What Exactly Is Chirp 3?

Chirp 3 is Google’s most advanced text-to-speech (TTS) technology. Built on large language models, it produces remarkably lifelike speech with natural emotional inflection and intonation. It currently supports eight distinct voices across 31 language locales, which makes it one of the broader voice AI offerings available.

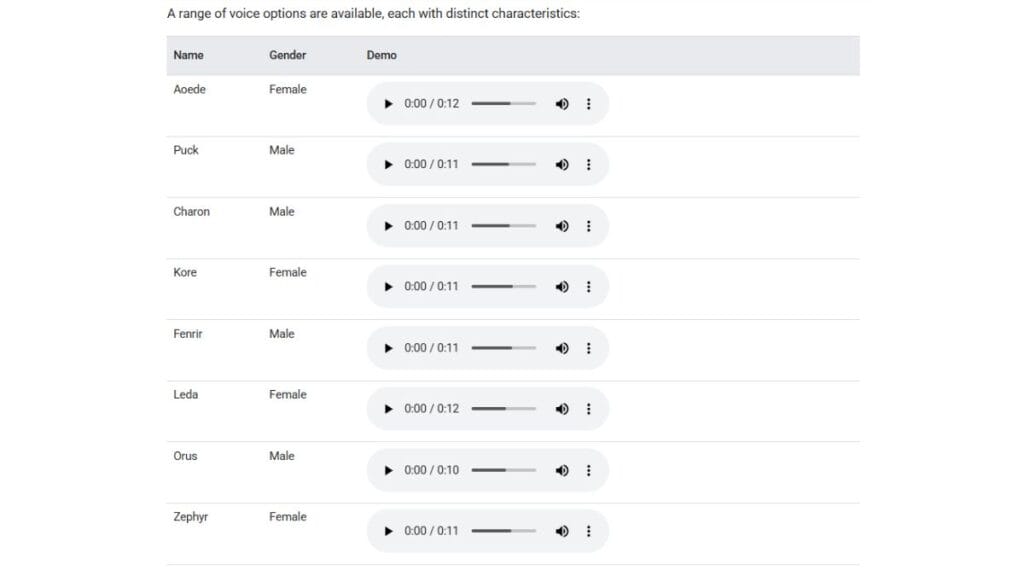

The voice options include four male voices (Puck, Charon, Fenrir, and Orus) and four female voices (Aoede, Kore, Leda, and Zephyr). Each voice has unique characteristics designed to suit different applications and contexts.

What sets Chirp 3 apart from previous voice models is its improved handling of pacing, flow, and emotional resonance, the elements whose absence has traditionally made AI-generated speech sound robotic or unnatural.

Technical Implementation Details

For developers and technical professionals, implementing Chirp 3 involves choosing between two primary methods: streaming synthesis and batch synthesis.

Streaming synthesis works best for real-time applications where immediate response is critical. This method generates audio on the fly as text is fed into the system, making it ideal for conversational interfaces and voice assistants. In Google’s sample code, this means writing a request generator that first sends the voice configuration and then yields text segments one at a time, while the API returns audio content as it is produced.
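The request sequence described above can be sketched as a generator. Google’s published Python sample builds these messages as `StreamingSynthesizeRequest` objects and passes the generator to `streaming_synthesize`; the minimal sketch below uses plain dicts instead so it runs without the client library, and its key names and the helper functions `split_into_segments` and `streaming_requests` are illustrative assumptions, not the client API.

```python
import re
from typing import Iterator


def split_into_segments(text: str) -> list[str]:
    """Split text after sentence-ending punctuation so each piece can be sent as soon as it exists."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def streaming_requests(text: str, voice_name: str, language_code: str) -> Iterator[dict]:
    """Yield one configuration message first, then one text-input message per segment.

    The dict keys loosely mirror Google's streaming sample (a config request followed
    by input requests); they are illustrative, not the actual client objects.
    """
    yield {"streaming_config": {"voice": {"name": voice_name, "language_code": language_code}}}
    for segment in split_into_segments(text):
        yield {"input": {"text": segment}}
```

In a live assistant, the text would typically come from an upstream source (for example an LLM response arriving in pieces) rather than a finished string, which is exactly the case where streaming pays off.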

Batch synthesis, by contrast, processes a complete block of text in a single request, which suits pre-recorded content such as audiobooks or marketing materials. Because the whole passage is available up front, the model can take its full context into account before generating speech, which may help produce more consistent output.
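A batch request maps onto the Cloud Text-to-Speech v1 `text:synthesize` REST method, which takes a JSON body with `input`, `voice`, and `audioConfig` fields and returns the audio as a base64 string in `audioContent`. The sketch below uses only the standard library; the helper names are hypothetical, and it assumes you already have an OAuth access token (depending on how you authenticate, extra headers may be required).

```python
import base64
import json
import urllib.request

SYNTHESIZE_URL = "https://texttospeech.googleapis.com/v1/text:synthesize"


def build_synthesize_body(text: str, voice_name: str, language_code: str,
                          audio_encoding: str = "MP3") -> dict:
    """Build the JSON body for a single (batch) synthesis request."""
    return {
        "input": {"text": text},
        "voice": {"languageCode": language_code, "name": voice_name},
        "audioConfig": {"audioEncoding": audio_encoding},
    }


def synthesize(body: dict, access_token: str) -> bytes:
    """POST the body and return decoded audio bytes (needs network access and valid credentials)."""
    request = urllib.request.Request(
        SYNTHESIZE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    # The REST response carries the audio as a base64-encoded "audioContent" string.
    return base64.b64decode(payload["audioContent"])
```

For quick experiments, one way to obtain a short-lived token is `gcloud auth print-access-token`; the returned bytes can then be written straight to a file such as `chapter1.mp3`. Production code would more commonly use Google’s client library with service-account credentials.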

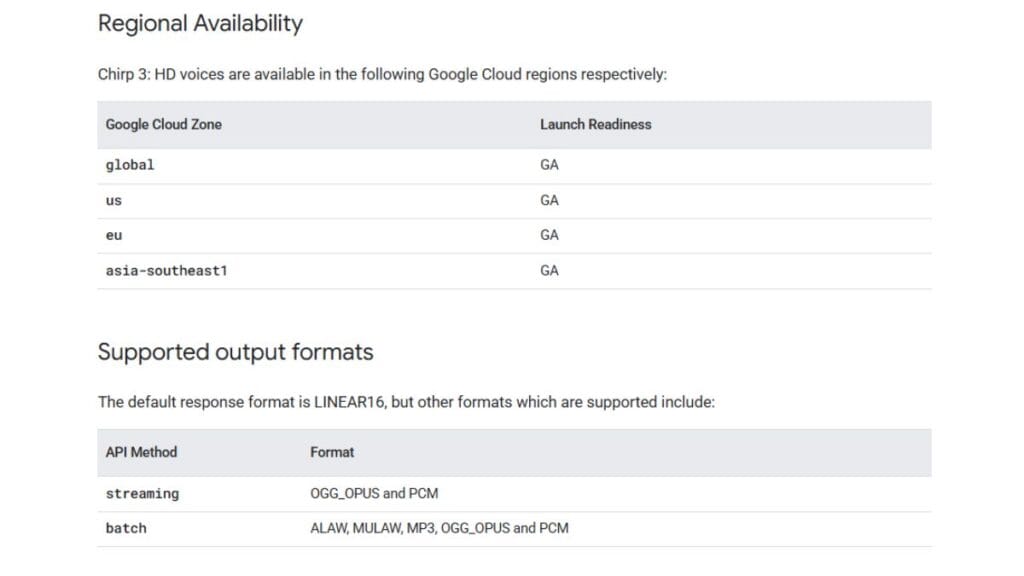

The speech output supports multiple audio formats. Streaming supports OGG_OPUS and PCM, while batch processing supports a wider set: ALAW, MULAW, MP3, OGG_OPUS, and PCM. This lets developers choose a format based on their needs for quality, file size, and compatibility.
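Because the allowed encodings differ by mode, it can help to validate the choice before sending any request. This small guard hard-codes the lists given in this article, which is an assumption worth re-checking against Google’s current documentation; `check_encoding` is a hypothetical helper.

```python
# Encodings as listed in this article; confirm against Google's current docs before relying on them.
STREAMING_ENCODINGS = frozenset({"OGG_OPUS", "PCM"})
BATCH_ENCODINGS = frozenset({"ALAW", "MULAW", "MP3", "OGG_OPUS", "PCM"})


def check_encoding(mode: str, encoding: str) -> str:
    """Return the normalized encoding if the mode supports it, otherwise raise ValueError."""
    allowed = {"streaming": STREAMING_ENCODINGS, "batch": BATCH_ENCODINGS}.get(mode)
    if allowed is None:
        raise ValueError(f"unknown mode: {mode!r}")
    normalized = encoding.upper()
    if normalized not in allowed:
        raise ValueError(f"{normalized} is not listed for {mode} synthesis; choose from {sorted(allowed)}")
    return normalized
```

Failing early like this gives a clearer error than a rejected API call, for example when MP3 output is accidentally requested from a streaming pipeline.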

Regional Availability and Deployment Options

Google has made Chirp 3 available across multiple cloud regions, including global, US, EU, and asia-southeast1. This wide availability helps address data residency requirements and reduces latency for applications serving users in different geographic areas.
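Choosing a region usually comes down to picking an API hostname. The sketch below is hypothetical: the region names come from this article, but the `<region>-texttospeech.googleapis.com` hostname pattern is an assumption you should verify in Google’s regional-endpoint documentation before use.

```python
# Region names as listed in this article. The "<region>-texttospeech.googleapis.com"
# hostname pattern is an assumption to verify against Google's docs.
SUPPORTED_REGIONS = ("global", "us", "eu", "asia-southeast1")


def tts_endpoint(region: str) -> str:
    """Return an API hostname for the given region (hypothetical helper)."""
    if region not in SUPPORTED_REGIONS:
        raise ValueError(f"Chirp 3 is not listed for region {region!r}")
    if region == "global":
        return "texttospeech.googleapis.com"
    return f"{region}-texttospeech.googleapis.com"
```

With the official Python client, a hostname like this would typically be supplied through `google.api_core.client_options.ClientOptions(api_endpoint=...)` when constructing the client, which keeps the region choice in one place for data-residency reviews.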

The service is accessible through Google Cloud’s standard APIs, with voice names following a consistent format: <locale>-<model>-<voice>. For example, to use the Kore voice for American English, developers would specify “en-US-Chirp3-HD-Kore” in their API calls.
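The naming convention is easy to encode in code so that typos are caught before an API call. This sketch builds and parses names of the form `<locale>-Chirp3-HD-<voice>` using the eight voices listed earlier; the helper names are hypothetical.

```python
MODEL = "Chirp3-HD"
# The eight voices described in this article.
VOICES = {
    "Puck": "male", "Charon": "male", "Fenrir": "male", "Orus": "male",
    "Aoede": "female", "Kore": "female", "Leda": "female", "Zephyr": "female",
}


def build_voice_name(locale: str, voice: str) -> str:
    """Compose <locale>-<model>-<voice>, e.g. en-US-Chirp3-HD-Kore."""
    if voice not in VOICES:
        raise ValueError(f"unknown Chirp 3 voice: {voice!r}")
    return f"{locale}-{MODEL}-{voice}"


def parse_voice_name(name: str) -> tuple[str, str]:
    """Split a full voice name back into (locale, voice)."""
    # Split on the model marker rather than on '-' alone, because locales contain hyphens too.
    locale, sep, voice = name.partition(f"-{MODEL}-")
    if not sep or voice not in VOICES:
        raise ValueError(f"not a Chirp 3 HD voice name: {name!r}")
    return locale, voice
```

Keeping the persona (for example Kore) fixed while varying only the locale is one simple way to hold a consistent voice across markets.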

Beyond Basic Implementation: The Art of Natural Speech Scripting

One aspect that mainstream coverage has largely missed is how crucial proper scripting is for effective voice AI implementation. Google’s documentation includes detailed guidance on creating scripts that sound natural rather than robotic.

The key to natural-sounding AI speech lies in understanding how humans actually talk. This means incorporating:

- Strategic pauses using punctuation like commas, ellipses, and hyphens

- Natural pacing variations throughout sentences and paragraphs

- Conversational elements like contractions and informal phrasing

- Context-appropriate tone adjustments

For example, instead of a robotic script like: “The product is now available. We have new features. It is very exciting.”

A more natural version would be: “The product is now available… and we’ve added some exciting new features. It’s, well, it’s very exciting.”
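Some of this review can be partly automated. The sketch below is a simple lint pass, not a Google feature, that flags uncontracted phrasings like those in the robotic example so a writer can decide how to rephrase them; the phrase list and function name are hypothetical starting points.

```python
import re

# Hypothetical starter list; extend it to match your own content style.
CONTRACTIONS = {
    "it is": "it's",
    "we have": "we've",
    "we are": "we're",
    "you are": "you're",
    "do not": "don't",
    "cannot": "can't",
}


def suggest_contractions(script: str) -> list[tuple[str, str]]:
    """Return (phrase found, suggested contraction) pairs in order of appearance."""
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, CONTRACTIONS)) + r")\b", re.IGNORECASE
    )
    return [(m.group(0), CONTRACTIONS[m.group(0).lower()]) for m in pattern.finditer(script)]
```

The output is a prompt for a human edit rather than an automatic rewrite: mechanically turning "We have new features" into "We've new features" would sound worse, which is why the natural version above restructures the sentence instead.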

This attention to scripting detail can transform how users perceive and interact with voice applications. Organizations implementing Chirp 3 should consider creating dedicated content teams focused on voice script optimization rather than simply repurposing written content.

Key Use Cases and Implementation Strategies

While Google mentions standard use cases like voice assistants and audiobooks, several other applications show significant promise:

Healthcare Communication Systems

Healthcare providers can use Chirp 3 to create personalized patient communication systems that deliver information in a natural, reassuring manner. This could include medication reminders, post-surgery instructions, or check-in prompts. The system’s ability to speak multiple languages makes it especially valuable in diverse healthcare settings.

Implementation strategy: Start with non-critical communications, where an error carries little clinical risk, then gradually expand to more complex interactions as confidence in the system grows.

Enhanced Educational Content

Educational institutions can transform text-based materials into engaging audio content. This benefits students with different learning styles and those with visual impairments. The multiple voice options allow content creators to assign different voices to different characters or concepts, making complex material easier to understand.
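Assigning voices to characters amounts to a lookup from speaker to voice before synthesis. In this sketch, `Line`, `assign_voices`, and the job dict format are hypothetical; each resulting job could be sent as its own batch request.

```python
from dataclasses import dataclass


@dataclass
class Line:
    speaker: str
    text: str


def assign_voices(lines: list[Line], cast: dict[str, str], locale: str = "en-US") -> list[dict]:
    """Turn a multi-speaker script into one synthesis job per line, each using its speaker's voice."""
    jobs = []
    for line in lines:
        voice = cast[line.speaker]  # a KeyError flags any speaker missing from the cast
        jobs.append({"voice_name": f"{locale}-Chirp3-HD-{voice}", "text": line.text})
    return jobs
```

Keeping the cast in one mapping means a voice can be swapped across an entire course by changing a single entry.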

Implementation strategy: Begin by converting supplementary materials, then collect student feedback before applying the technology to primary instructional content.

Multilingual Customer Service

Companies with global operations can implement consistent customer service experiences across language barriers. Chirp 3’s support for 31 language locales enables organizations to maintain brand voice across markets while speaking to customers in their preferred language.

Implementation strategy: Develop core scripts in a primary language, then use professional translators to adapt these scripts before implementing them in the voice system.

Accessible Marketing Content

Marketing teams can create audio versions of campaigns that maintain brand voice consistency while making content accessible to more audiences. This approach helps brands reach consumers who prefer audio content or have visual limitations.

Implementation strategy: Test voice-based marketing with small segments before wider rollout, and establish clear guidelines for maintaining brand voice in audio format.

Beyond Novelty: Measuring Voice AI Implementation Success

Organizations implementing Chirp 3 should establish clear metrics to measure success beyond the novelty factor. Key performance indicators might include:

- Completion rates for voice-guided processes

- User sentiment analysis comparing voice to text interactions

- Resolution rates for voice-based customer service

- Time savings for users engaging with voice versus text interfaces

- Accessibility improvements for users with different needs
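As an illustration of the first metric, completion rate per channel is simply completed sessions divided by started sessions. The event format below is a hypothetical assumption (one event per session); real data would come from your own analytics pipeline.

```python
from collections import defaultdict


def completion_rates(events: list[dict]) -> dict[str, float]:
    """Share of started sessions that completed, per channel.

    Each event is assumed (hypothetically) to look like
    {"channel": "voice", "completed": True}, one event per session.
    """
    started: dict[str, int] = defaultdict(int)
    completed: dict[str, int] = defaultdict(int)
    for event in events:
        started[event["channel"]] += 1
        if event["completed"]:
            completed[event["channel"]] += 1
    return {channel: completed[channel] / started[channel] for channel in started}
```

Comparing the voice and text figures side by side is what turns the metric into evidence about whether voice is adding value.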

These metrics help ensure that voice AI implementation delivers genuine value rather than simply following a technology trend.

Technical Limitations and Workarounds

While Chirp 3 represents significant progress, several technical limitations remain. Understanding these can help developers implement more effective solutions:

- Handling of specialized terminology varies across languages and domains

- Emotional nuance control remains limited compared to human voice actors

- Real-time context adaptation still presents challenges

Developers can address these limitations through:

- Custom pronunciation dictionaries for domain-specific terminology

- Script optimization focusing on supported emotional ranges

- Breaking complex interactions into smaller, more manageable segments
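Two of these workarounds can be sketched as plain text preprocessing. The first swaps in a simple respelling dictionary (a stand-in technique, not an API feature) for hard-to-pronounce terms; the second groups whole sentences into size-bounded chunks. The respellings and the `max_bytes` value are hypothetical; check Google’s quota documentation for actual per-request input limits.

```python
import re

# Hypothetical respellings; build your own list by listening to the synthesized output.
RESPELLINGS = {
    "HbA1c": "H B A one C",
}


def apply_respellings(text: str, respellings: dict[str, str]) -> str:
    """Replace whole-word terms with phonetic respellings before synthesis."""
    for term, spoken in respellings.items():
        text = re.sub(rf"\b{re.escape(term)}\b", lambda _match: spoken, text)
    return text


def chunk_text(text: str, max_bytes: int) -> list[str]:
    """Group whole sentences into chunks whose UTF-8 size stays within max_bytes.

    A single sentence longer than max_bytes is kept as its own (oversized) chunk.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}" if current else sentence
        if current and len(candidate.encode("utf-8")) > max_bytes:
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

Measuring size in UTF-8 bytes rather than characters matters for non-Latin scripts, where one character can take several bytes.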

Safety Measures and Ethical Considerations

Organizations implementing voice AI should establish their own ethical guidelines covering:

- Clear disclosure when users are interacting with AI voices

- Limitations on impersonation of specific individuals

- Careful consideration of emotional manipulation through voice tone

- Protocols for handling sensitive or controversial content

Google’s Voice AI Strategy in Context

Google’s addition of Chirp 3 to Vertex AI places it alongside other generative AI tools, including the Gemini models, the Imagen image generation system, and the Veo 2 video generation tool. Together these form a comprehensive generative AI ecosystem within the Vertex platform.

This strategy positions Google differently from competitors like ElevenLabs and Sesame, which have focused exclusively on voice technology. While specialized companies may currently lead in specific aspects of voice realism, Google’s integration advantage could prove decisive for enterprise adoption.

DeepMind CEO Demis Hassabis frames this as a long-term development: “It’s going to change things… over the next decade, so the medium to longer term. It’s one of those interesting moments in time.”

What This Means For Organizations

For organizations evaluating voice AI implementation, several practical considerations emerge:

- Integration capabilities may outweigh absolute voice quality for many applications

- Platform consolidation (using Vertex AI for multiple AI needs) offers efficiency advantages

- Backing from a large provider like Google may offer more long-term stability than depending on a startup

- The rapid advancement pace means frequent reassessment of capabilities is essential

Organizations should approach Chirp 3 implementation as part of a broader voice AI strategy rather than a one-time technology deployment.

What’s Next For Voice AI?

The rapid advancement in voice AI suggests several developments on the horizon:

- Increasing personalization capabilities allowing unique voice creation

- Better handling of conversational elements like interruptions and overlapping speech

- More sophisticated emotion modeling beyond basic positive/negative tones

- Deeper integration with other AI systems for context-aware responses

For technical professionals working with voice AI, staying current with these developments through regular testing and exploration will be essential.

How To Get Started With Chirp 3

For those ready to implement Chirp 3, the process starts with Google Cloud access and basic familiarity with the Text-to-Speech API structure. Building proof-of-concept implementations from Google’s published code samples offers an accessible entry point before moving to more complex applications.

The most successful implementations will likely come from teams that combine technical AI expertise with content creation experience, recognizing that both technology and scripting play critical roles in effective voice AI applications.

Voice represents AI’s next major frontier, and Google’s Chirp 3 offers organizations a mature platform to start exploring this space. Those who approach it with clear strategic goals and an understanding of both its capabilities and limitations will be best positioned to create voice applications that truly enhance user experiences.