Artificial intelligence (AI) avatars are digitally synthesized representations of individuals, brought to life through sophisticated AI algorithms. These advanced digital entities possess the capability to emulate human behaviors, exhibit a range of expressions, and even generate synthetic speech, blurring the lines between the real and the virtual.

In contrast to their predecessors, which were often static images or simple animations, AI avatars achieve a heightened level of realism and interactivity, enabling more engaging online experiences. Continued refinement of these technologies is producing increasingly photorealistic avatars capable of real-time interaction, which in turn creates a growing demand for effective methods to distinguish them from genuine video content.

Concurrently, there has been a significant surge in the development and accessibility of AI video generation technologies and platforms. Modern AI tools empower users to effortlessly create videos using simple text prompts, uploaded images, or even by manipulating existing footage.

This ease of creation has unlocked numerous beneficial applications across various sectors, including content creation and education. However, this powerful technology also presents opportunities for malicious exploitation, such as the dissemination of disinformation and the perpetration of scams.

The inherent dual-use nature of AI video generation underscores the critical importance of establishing robust detection mechanisms to proactively address and mitigate potential harms.

In response to these growing concerns, there is an increasing demand for tools and websites capable of accurately identifying AI-generated videos, particularly those featuring AI avatars. The ability to reliably distinguish between authentic and synthetic video content is paramount in combating the spread of misinformation, preventing fraudulent activities, and safeguarding against other malicious uses of this technology.

As AI technology continues its rapid advancement, the subtle cues that once allowed human observers to differentiate between real and fake videos are becoming increasingly imperceptible. This necessitates a shift towards automated detection solutions that can analyze the intricate details of video content with a precision that surpasses human capabilities.

Fundamentals of AI Video Detection

At its core, AI video detection relies on the principle of scrutinizing various facets of a video to pinpoint anomalies or artifacts that serve as indicators of AI generation. This process typically involves the application of sophisticated machine learning (ML) and deep learning (DL) algorithms.

These algorithms are trained on extensive datasets comprising both authentic and AI-generated videos, enabling them to learn the subtle distinctions between the two. The effectiveness of these detection tools is intrinsically linked to the quality and diversity of the data used during their training.

A comprehensive and varied training dataset ensures that the AI models can recognize a broader range of AI-generated content and minimize the occurrence of both false positives and false negatives.
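The two error types mentioned above can be made concrete with a small calculation. The following is a minimal sketch, assuming a labeled evaluation set where `True` means "AI-generated"; the function name `error_rates` and the sample data are illustrative and not taken from any particular tool.

```python
def error_rates(labels, predictions):
    """Return (false_positive_rate, false_negative_rate).

    labels and predictions are equal-length sequences of booleans,
    where True means "AI-generated" and False means "authentic".
    """
    pairs = list(zip(labels, predictions))
    false_pos = sum(1 for truth, pred in pairs if not truth and pred)
    false_neg = sum(1 for truth, pred in pairs if truth and not pred)
    negatives = sum(1 for truth, _ in pairs if not truth)
    positives = sum(1 for truth, _ in pairs if truth)
    # Guard against an evaluation set that lacks one of the classes.
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


# Illustrative data: 4 AI-generated and 4 authentic videos.
labels = [True, True, True, False, False, False, False, True]
predictions = [True, False, True, False, True, False, False, True]
fpr, fnr = error_rates(labels, predictions)
print(f"false positive rate: {fpr:.2f}, false negative rate: {fnr:.2f}")
```

A dataset that under-represents one generator or one kind of authentic footage tends to show up as an elevated rate on exactly that slice, which is why both rates are worth tracking separately rather than as a single accuracy figure.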

Several key technologies underpin the functionality of AI video detection tools. Machine learning (ML) algorithms play a crucial role in identifying patterns and features that differentiate real videos from those generated by AI. These algorithms can employ both supervised learning, where models are trained on labeled data to recognize specific characteristics, and unsupervised learning, which is useful for detecting anomalies without prior labeling.

Deep learning (DL), a subfield of ML, utilizes deep neural networks, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), to analyze video content. CNNs are particularly effective at analyzing individual video frames to detect spatial inconsistencies or artifacts.

RNNs and their variant, Long Short-Term Memory (LSTM) networks, excel at examining the temporal aspects of video, identifying unnatural motion patterns or inconsistencies that develop across successive frames.

Computer vision techniques are also integral to AI video detection, enabling tasks such as object recognition, facial analysis, and the tracking of movements within a video. These techniques can involve identifying objects within the video, recognizing activities or actions, and segmenting the video into distinct scenes.

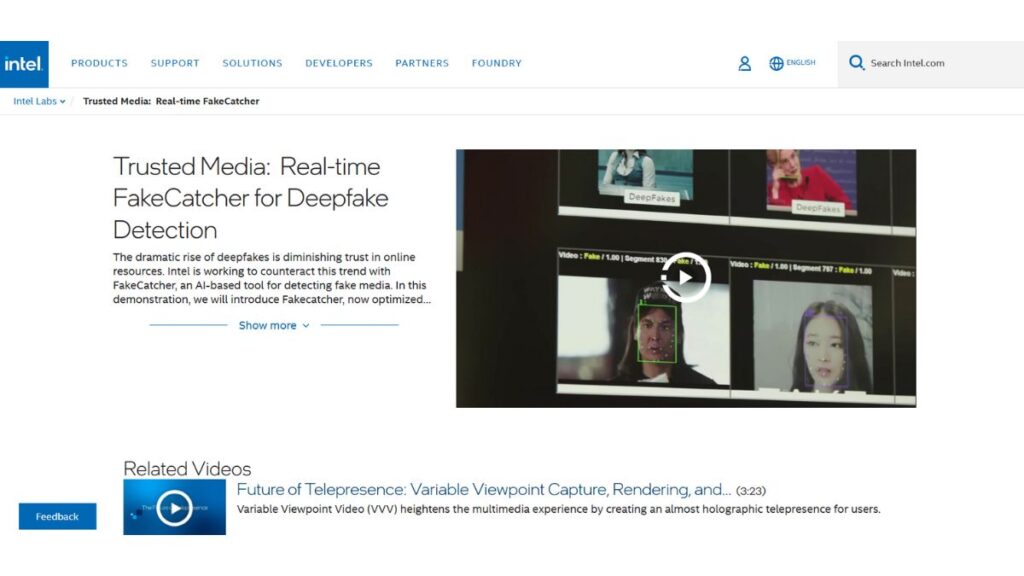

Furthermore, some advanced tools incorporate biometric analysis. For instance, Intel’s FakeCatcher employs Photoplethysmography (PPG), a technique that detects subtle changes in blood flow from video pixels, to assess the authenticity of a video by analyzing underlying biological signals.
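To illustrate the general idea behind PPG-style analysis, the sketch below looks for a dominant frequency in a plausible heart-rate band within a per-frame brightness signal. It is not Intel's algorithm: the input signal (for example, the mean green-channel value of a face region per frame), the 0.7 to 4.0 Hz band, and the function name `dominant_frequency` are all assumptions made for illustration, and the signal here is synthetic.

```python
import math


def dominant_frequency(signal, fps, low_hz=0.7, high_hz=4.0):
    """Return the strongest frequency (Hz) within [low_hz, high_hz].

    Uses a naive discrete Fourier transform, which is adequate for the
    short signals used in this sketch.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [value - mean for value in signal]  # remove the DC offset
    best_freq, best_power = 0.0, 0.0
    for k in range(1, n // 2):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq


# Synthetic 10-second signal at 30 fps with a 1.2 Hz (72 bpm) pulse component.
fps = 30
signal = [100.0 + 0.5 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(300)]
pulse_hz = dominant_frequency(signal, fps)
print(f"estimated pulse: {pulse_hz * 60:.0f} bpm")
```

A real pipeline would additionally need face tracking, illumination compensation, and a learned classifier on top of such signals; a missing or implausible pulse is only one weak cue.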

This multi-faceted approach, which integrates visual, temporal, and in some cases, biometric analysis, enhances the robustness and accuracy of AI video detection, as different AI generation methods may leave distinct and detectable traces across these various data modalities.
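One simple way to combine per-modality results is a weighted average that skips modalities that could not be evaluated. This is a minimal sketch under stated assumptions: each analyzer is assumed to return a probability-like score in [0, 1], and the modality names, weights, and function name `fuse_scores` are hypothetical rather than drawn from any specific product.

```python
def fuse_scores(scores, weights):
    """Weighted average of available modality scores.

    scores maps modality name -> score in [0, 1], or None when that
    analysis was not possible (for example, no visible face for a
    biometric check). weights maps modality name -> relative weight.
    """
    available = {name: s for name, s in scores.items() if s is not None}
    total_weight = sum(weights[name] for name in available)
    if total_weight == 0:
        raise ValueError("no modality scores available")
    return sum(weights[name] * s for name, s in available.items()) / total_weight


# Hypothetical weights and scores; the biometric check was unavailable.
weights = {"visual": 0.5, "temporal": 0.3, "biometric": 0.2}
scores = {"visual": 0.8, "temporal": 0.6, "biometric": None}
print(f"fused suspicion score: {fuse_scores(scores, weights):.3f}")
```

Renormalizing by the weights that are actually present keeps the fused score comparable between videos where different analyses were possible.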

Available Tools and Platforms for AI Video Detection

A growing number of AI-powered tools and platforms are emerging to address the need for reliable AI video detection. These tools vary in their primary focus, ranging from general AI content detection to specialized deepfake identification, and offer a range of functionalities, including real-time analysis capabilities and API access for integration into other systems.

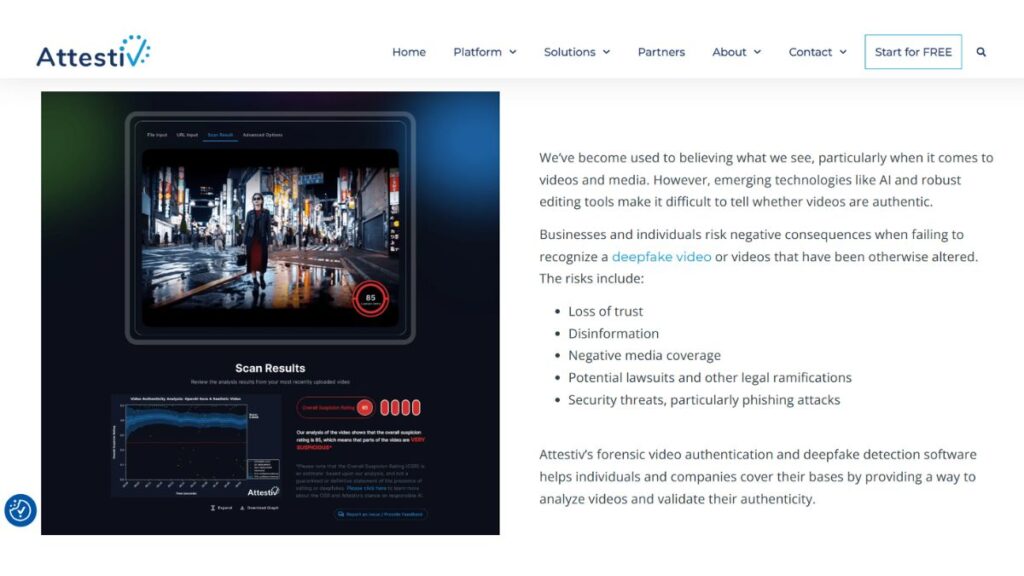

Several tools stand out for their reported capabilities in detecting AI-generated video. Attestiv Deepfake Video Detection Software utilizes patented AI and machine learning technology to identify evidence of tampering or synthetic elements within video files.

Their process involves a straightforward three-step approach: users upload a video via a web app or API, the system analyzes the content for tampering, and a report detailing the findings is generated. Attestiv provides an “Overall Suspicion Rating” and can also apply unique digital signatures, or fingerprints, to videos to track their provenance.
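The source does not describe how Attestiv computes its fingerprints. As a generic stand-in for the concept of a provenance fingerprint, the sketch below computes a SHA-256 digest of a video file using Python's standard `hashlib`; the function name `fingerprint_file` is illustrative.

```python
import hashlib


def fingerprint_file(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks.

    Reading in chunks avoids loading a large video into memory at once.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

If a fingerprint recorded at capture time no longer matches the file in hand, the file has changed since then. A cryptographic hash cannot say what changed, and it also changes under benign re-encoding, so it complements rather than replaces content analysis.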

The platform reports a minimum score of 97% as measured by the area under the ROC curve (AUC), a threshold-independent ranking metric rather than a raw accuracy figure. The emphasis on forensic analysis and the ability to track video provenance suggest a strong focus on providing verifiable evidence of manipulation.
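Because AUC is often confused with plain accuracy, a small worked example may help. The sketch below computes AUC directly from its pairwise definition: the fraction of (synthetic, authentic) pairs in which the synthetic video receives the higher suspicion score, with ties counted as half. The data and the function name `roc_auc` are illustrative assumptions.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    labels: truthy for AI-generated, falsy for authentic.
    scores: higher means "more suspicious".
    """
    positives = [s for y, s in zip(labels, scores) if y]
    negatives = [s for y, s in zip(labels, scores) if not y]
    if not positives or not negatives:
        raise ValueError("need at least one example of each class")
    wins = 0.0
    for pos in positives:
        for neg in negatives:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
print(f"AUC = {roc_auc(labels, scores):.3f}")  # 8 of 9 pairs ranked correctly
```

An AUC of 1.0 means every synthetic video outranks every authentic one; it says nothing about which threshold to use in deployment, which is where false positive and false negative rates come in.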

Sensity AI offers a comprehensive deepfake detection platform capable of analyzing videos, images, audio, and even AI-generated text. This platform employs advanced AI and deep learning technology to detect AI alterations across various media types and provides real-time monitoring of over 9,000 sources to track malicious deepfake activity.

Sensity AI reports an accuracy rate of up to 98%, which it says outperforms traditional non-AI forensic tools. The platform’s broad capabilities across multiple media formats highlight the interconnected nature of AI-generated disinformation and the need for holistic detection solutions.

ScreenApp AI Video Detector claims to offer advanced detection technology with an accuracy exceeding 99% for identifying AI-generated videos. The system analyzes multiple parameters, including visual consistency, motion patterns, digital artifacts, and metadata signatures, and can provide frame-by-frame analysis when needed.

ScreenApp supports all major video formats and can detect both fully AI-generated videos and those with AI-modified segments. The high claimed accuracy and detailed analysis suggest a focus on identifying even subtle indicators of AI manipulation.

DeepBrain AI offers technology for detecting deepfake videos and voices through pixel-by-pixel analysis of images and videos, and comprehensive audio analysis considering factors like time, frequency, and noise. The detection process typically takes 5 to 10 minutes, and the platform provides technical information about identified deepfakes, such as modulation rate and synthesis type.

DeepBrain AI reports an increased detection rate due to continuous training on a large dataset. The provision of technical details can be particularly valuable for forensic investigations, offering insights into the methods used to create the manipulated content.

Intel’s FakeCatcher distinguishes itself as the first real-time deepfake detector that analyzes biological signals to determine video authenticity. Unlike many AI-based detectors that focus on visual or auditory inconsistencies, FakeCatcher uses Photoplethysmography (PPG) to detect subtle changes in blood flow from video pixels.

Intel claims its model achieves a 96% accuracy rate under controlled conditions and 91% accuracy when tested on “wild” deepfake videos. This approach of leveraging human biological traits represents a significant advancement in deepfake detection, potentially offering a more resilient method against increasingly realistic AI-generated content.

Hive AI has developed a Deepfake Detection API designed to identify AI-generated content across both images and videos. This API detects faces within the media and applies a classification system to label each face as either “yes_deepfake” or “no_deepfake” with a confidence score.

Trained on a diverse dataset of synthetic and real videos, Hive’s technology is integrated into content moderation systems for digital platforms. The API focus of Hive AI suggests its suitability for seamless integration into existing platforms and automated content moderation workflows.
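A moderation workflow built on per-face labels needs some rule for turning them into a video-level decision. The sketch below shows one such rule. Only the label names `yes_deepfake` and `no_deepfake` come from the description above; the data layout (one dictionary of label scores per detected face), the threshold, and the function name `flag_video` are hypothetical and do not represent Hive's actual response format.

```python
def flag_video(faces, threshold=0.9):
    """Flag a video if any detected face is confidently labeled a deepfake.

    faces: list of dicts such as {"yes_deepfake": 0.97, "no_deepfake": 0.03}
    (a hypothetical layout chosen for this sketch).
    """
    flagged = [
        index
        for index, face in enumerate(faces)
        if face.get("yes_deepfake", 0.0) >= threshold
    ]
    return {"is_flagged": bool(flagged), "flagged_faces": flagged}


faces = [
    {"yes_deepfake": 0.02, "no_deepfake": 0.98},
    {"yes_deepfake": 0.95, "no_deepfake": 0.05},
]
print(flag_video(faces))
```

Flagging on any single face favors catching partial manipulations at the cost of more false positives; a platform might instead route borderline scores to human review.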

Other notable tools include OpenAI’s Deepfake Detector, which targets images rather than video and has demonstrated a 98.8% accuracy rate in detecting images produced by the company’s DALL-E 3 model. Additionally, platforms like Reality Defender, Pindrop Security, Cloudflare Bot Management, AI Voice Detector, DeepDetector, Microsoft Video Authenticator, and Deepware offer various capabilities in identifying manipulated media.

To provide a consolidated overview, the following table summarizes the key features and reported accuracy of some of the aforementioned AI video detection tools:

Table 1: Key Features and Reported Accuracy of AI Video Detection Tools

| Tool Name | Primary Detection Method | Media Types Supported | Claimed Accuracy | Key Features | Free Trial/Plan Available |
| --- | --- | --- | --- | --- | --- |
| Attestiv Deepfake Video Detection Software | AI and ML analysis of video frames | Video | ≥ 97% AUC | Forensic analysis, provenance tracking, Overall Suspicion Rating, digital fingerprinting | Free and paid plans |
| Sensity AI | Advanced AI and deep learning | Video, Image, Audio, Text | 95-98% | Multimodal detection, real-time monitoring, KYC & identity verification | Contact for details |
| ScreenApp AI Video Detector | Analysis of visual consistency, motion patterns, digital artifacts, metadata | Video | > 99% | Frame-by-frame analysis, supports various video formats | Free tier available |
| DeepBrain AI | Pixel-by-pixel image/video analysis, audio analysis | Video, Image, Audio | Not specified (detection rate increased through training) | Technical information provided on detected deepfakes | Starts from $24/month |
| Intel’s FakeCatcher | Analysis of biological signals (blood flow using PPG) | Video | 96% (controlled), 91% (wild) | Real-time detection | Not publicly available |
| Hive AI’s Deepfake Detection API | Face detection and classification | Image, Video | High accuracy | API access for integration, content moderation focused | Contact for details |
| OpenAI’s Deepfake Detector | Not specified | Image (DALL-E 3) | 98.8% (DALL-E 3 images) | Specific to OpenAI’s DALL-E 3 images | Part of ChatGPT Plus |

This table offers a comparative snapshot of leading AI video detection tools, allowing for a quick assessment of their capabilities and reported performance. It is important to note that claimed accuracy rates may vary depending on the specific testing conditions and the types of AI-generated content analyzed.

Techniques for Identifying AI Avatar Videos

While general AI video detection techniques are applicable to videos featuring AI avatars, there are specific characteristics and methods that can be particularly useful in identifying these digitally created representations.

AI avatar videos may exhibit certain visual artifacts or inconsistencies that are more pronounced due to the complexities of rendering realistic human-like figures. For instance, facial features might appear unnaturally smooth or lack fine details in skin texture.

Inconsistencies in the appearance of eyes and teeth, such as unusual reflections or unnatural shapes, can also be telltale signs. The blinking behavior of an AI avatar might appear either absent or too frequent and regular, deviating from natural human patterns.
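The blinking cue can be turned into a rough automated check. The following sketch assumes blink timestamps have already been extracted by some upstream eye-tracking step (not shown) and flags clips where blinks are absent, excessive, or suspiciously regular. The thresholds and the function name `blink_report` are illustrative assumptions, not validated values.

```python
import statistics


def blink_report(blink_times, duration_s, min_rate=4.0, max_rate=40.0, min_cv=0.2):
    """Summarize blink behavior and list reasons for suspicion.

    blink_times: ascending blink timestamps in seconds.
    min_rate / max_rate: illustrative bounds on blinks per minute.
    min_cv: illustrative lower bound on the coefficient of variation of
    inter-blink intervals; very low values mean metronome-like blinking.
    """
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    rate = 60.0 * len(blink_times) / duration_s
    intervals = [later - earlier for earlier, later in zip(blink_times, blink_times[1:])]
    cv = None
    if len(intervals) >= 2:
        cv = statistics.pstdev(intervals) / statistics.mean(intervals)
    reasons = []
    if rate < min_rate:
        reasons.append("too few blinks")
    if rate > max_rate:
        reasons.append("too many blinks")
    if cv is not None and cv < min_cv:
        reasons.append("blinks too regular")
    return {"blinks_per_minute": rate, "interval_cv": cv, "reasons": reasons}


print(blink_report([2.0, 5.0, 8.0, 11.0, 14.0, 17.0], duration_s=20))
print(blink_report([1.5, 4.0, 9.5, 11.0, 17.5], duration_s=20))
```

Short clips give unreliable statistics, so a check like this is better treated as one weak signal among several than as a verdict.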

Asymmetry or disproportionate facial features, particularly in less sophisticated AI models, can also indicate artificial generation. Rendering challenges can also manifest as issues with glasses, such as inconsistent glare, or unnatural depictions of facial hair.

Beyond facial features, the body language and movement of AI avatars can reveal their synthetic nature. Movements might appear stiff, jerky, or lack the subtle nuances of natural human motion. The absence of subtle micro-expressions, which are integral to conveying genuine emotions, can also be a clue.

Furthermore, inconsistencies in how the avatar interacts with its environment, such as a lack of proper physical reactions to objects, can suggest AI generation.

Audio discrepancies can also be indicative of an AI avatar. The synthesized voice might sound unnatural, with unusual pauses, intonation patterns, or a lack of natural breathing sounds. Lip synchronization issues, where the movement of the avatar’s lips does not perfectly align with the spoken audio, are another common artifact.
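Lip synchronization can be checked roughly by correlating how open the mouth is with how loud the audio is, frame by frame, and looking for the time offset at which the two line up best. The sketch below assumes both series have already been extracted at the video frame rate by upstream steps not shown here; the function names `pearson` and `best_lag` and the sample data are illustrative.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def best_lag(mouth, audio, max_lag=5):
    """Return (lag, correlation) for the best-aligned offset.

    A positive lag means the audio trails the mouth movement by that
    many frames; a negative lag means the audio leads.
    """
    best = (0, -1.0)
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            m, a = mouth[: len(mouth) - lag], audio[lag:]
        else:
            m, a = mouth[-lag:], audio[: len(audio) + lag]
        r = pearson(m, a)
        if r > best[1]:
            best = (lag, r)
    return best


# Illustrative series: the audio envelope is the mouth signal delayed by 3 frames.
mouth = [0, 1, 3, 1, 0, 0, 2, 4, 2, 0, 0, 1, 2, 1, 0, 0, 3, 1, 0, 0]
audio = [0, 0, 0] + mouth[:-3]
lag, r = best_lag(mouth, audio)
print(f"best lag: {lag} frames, correlation: {r:.2f}")
```

A consistently nonzero best lag, or a low correlation at every lag, would be a reason to look more closely; what offset counts as suspicious depends on the frame rate and on how the video was encoded.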

Additionally, background sounds that seem incongruous with the visual context can raise suspicion.

Anomalies in the background and environment of the video can also point to AI generation. This can include inconsistencies in shadows, lighting, or reflections that do not behave as they would in the real world.

Objects within the scene might exhibit unusual behavior or defy the laws of physics, such as appearing to pass through solid objects. Unnaturally blurred or textured backgrounds can also be a characteristic of some AI-generated videos.

Detecting AI avatar videos effectively often involves a combination of automated tools and careful manual observation. The AI video detection tools discussed above under Available Tools and Platforms for AI Video Detection can be employed to analyze videos for the types of anomalies mentioned here. These tools are designed to identify inconsistencies in visual and sometimes auditory cues that might escape human detection.

Manual observation remains a crucial aspect of identifying AI avatar videos. Individuals should be trained to closely examine facial details, paying attention to skin texture, eye and teeth appearance, and blinking patterns.

Scrutinizing body language for stiffness or unnatural movements, and evaluating audio quality for synthetic sounds or lip-sync issues are also important steps. Observers should also be vigilant for inconsistencies in the background and any behaviors that defy the laws of physics.

The “uncanny valley” effect, a feeling of unease or eeriness when encountering something that appears almost human but not quite, can also serve as an intuitive indicator that a video might feature an AI avatar.

Reverse image and video search engines can be valuable tools for identifying the origin of a video and determining if it has been manipulated or if similar content exists elsewhere online. This can help to establish the authenticity and context of the video.

In cases where information about the video’s origin or the prompt used to generate it is available, comparing the video with results from text-to-image or video generators using similar prompts can provide further clues. While metadata associated with a video might potentially offer insights into its origin, its reliability in identifying AI-generated content can be limited.

The most effective strategy for identifying AI avatar videos likely involves a synergistic approach that combines the speed and analytical power of automated tools with the nuanced observation and critical thinking of human analysis.

Challenges and Limitations in Detecting AI Avatar Videos

Detecting AI avatar videos presents a complex and evolving challenge, primarily due to the rapid advancements in AI generation technology. The field has witnessed a significant shift from older AI models like Generative Adversarial Networks (GANs) to more sophisticated diffusion models.

Diffusion models excel at creating highly realistic and coherent videos by gradually transforming random noise into clear, lifelike visuals, refining each frame while ensuring smooth transitions. This increasing sophistication poses a significant hurdle for detection tools, necessitating continuous research and development of more advanced techniques to keep pace with these evolving capabilities.

Current AI video detection tools, while increasingly powerful, are not without their limitations. Accuracy remains a key concern, with the potential for both false positives, where genuine videos are incorrectly flagged as AI-generated, and false negatives, where AI-generated videos evade detection.

Many tools face challenges in generalizing their detection capabilities across different AI generation methods and models. A tool trained on videos generated by one type of AI might not perform effectively on content created using a different approach.
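Generalization gaps of this kind become visible when detection results are broken down by the generator that produced each test video. The sketch below computes a per-generator detection rate; the generator names, sample results, and function name `recall_by_generator` are hypothetical.

```python
from collections import defaultdict


def recall_by_generator(records):
    """Detection rate per generator.

    records: iterable of (generator_name, detected) pairs, one per
    AI-generated test video, where detected is True if the tool flagged it.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for generator, detected in records:
        totals[generator] += 1
        hits[generator] += int(detected)
    return {generator: hits[generator] / totals[generator] for generator in totals}


# Hypothetical results for a detector trained mostly on one generator family.
records = [
    ("gan_a", True), ("gan_a", True), ("gan_a", False),
    ("diffusion_b", False), ("diffusion_b", True),
    ("diffusion_b", False), ("diffusion_b", False),
]
for generator, rate in recall_by_generator(records).items():
    print(f"{generator}: {rate:.2f}")
```

A single aggregate accuracy number can hide a detector that performs well on familiar generators and poorly on unfamiliar ones, so a breakdown like this is a useful complement to headline figures.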

Furthermore, some of the more advanced detection methods can be computationally intensive, requiring significant processing power and time for analysis. Malicious actors may also develop techniques specifically designed to evade detection by exploiting weaknesses in current algorithms.

Many existing tools are primarily focused on detecting deepfakes involving facial manipulation, and their effectiveness in identifying fully synthetic AI avatars might be less pronounced. Finally, access to the most cutting-edge detection tools might be limited, or they may come with substantial costs.

Therefore, it is crucial to recognize that current AI video detection tools are not infallible, and their accuracy can fluctuate depending on the sophistication of the AI-generated content and the specific tool being used.

Detecting AI avatars specifically introduces its own set of challenges. The increasing realism of these digital representations means that subtle anomalies, which might have been more apparent in earlier iterations, are becoming increasingly difficult to discern.

The wide variety of avatar styles and rendering qualities employed by different AI generation platforms can further complicate detection efforts, as tools need to be robust enough to identify synthetic elements across this spectrum.

Additionally, the potential for AI avatars to be seamlessly integrated into otherwise authentic video footage adds another layer of complexity, requiring detection methods that can identify inconsistencies within specific elements of a video rather than just the entire content.

Addressing these challenges likely requires the development of specialized algorithms specifically trained to identify the unique characteristics and potential flaws inherent in AI-generated avatars.

Research and Development in AI Video Detection

The field of AI video detection is undergoing active research and development, with numerous efforts focused on creating more effective and robust detection techniques. Emerging techniques include analyzing the Diffusion Reconstruction Error (DIRE) to identify videos generated by diffusion models, which have become increasingly prevalent.

This method measures the difference between an input video frame and its reconstructed version by a pre-trained diffusion model. Research also explores spatio-temporal anomaly learning, utilizing convolutional neural networks (CNNs) to capture subtle forensic traces left during the AI generation process.
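The reconstruction-error measurement described above can be sketched as follows. A real implementation would pass each frame through a pre-trained diffusion model; here `toy_reconstruct` is a hypothetical stand-in (a simple moving average over a one-dimensional "frame") used only so the example runs, and `reconstruction_error` is likewise an illustrative name. The premise usually given for this approach is that frames produced by a diffusion model tend to be reconstructed more closely than real frames, so a lower error is treated as more suspicious.

```python
def reconstruction_error(frame, reconstruct):
    """Mean absolute per-pixel difference between a frame and its reconstruction.

    frame: flat list of pixel intensities.
    reconstruct: callable returning a reconstructed frame of equal length
    (in practice, a pre-trained diffusion model; here, any stand-in).
    """
    rebuilt = reconstruct(frame)
    if len(rebuilt) != len(frame):
        raise ValueError("reconstruction must match the frame size")
    return sum(abs(a - b) for a, b in zip(frame, rebuilt)) / len(frame)


def toy_reconstruct(frame):
    """Hypothetical stand-in for a diffusion model: 3-tap moving average."""
    last = len(frame) - 1
    return [
        (frame[max(i - 1, 0)] + frame[i] + frame[min(i + 1, last)]) / 3
        for i in range(len(frame))
    ]


smooth = [10.0] * 8        # content the stand-in reproduces exactly
textured = [0.0, 30.0] * 4  # content the stand-in reproduces poorly
print(reconstruction_error(smooth, toy_reconstruct))
print(reconstruction_error(textured, toy_reconstruct))
```

In practice the error map itself, not just its mean, would typically be fed to a classifier, and the decision threshold would be learned from labeled data rather than set by hand.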

These CNNs are designed to identify anomalies in both the spatial characteristics of individual frames and the temporal flow of motion across frames. Other approaches involve techniques like facial warping artifact identification, often combined with deep learning models, to detect subtle distortions introduced during the creation of deepfakes.

Furthermore, research continues in specialized areas such as audio deepfake detection and multimodal forgery detection, recognizing that manipulation can occur across different media modalities. The ongoing exploration of these novel methods indicates a strong commitment within the research community to enhance the capabilities of AI video detection.

The progress in AI video detection is also supported by the creation of large-scale datasets and benchmarks. Datasets like the Generated Video Dataset (GVD) and the DeepFake Detection Challenge Dataset (DFDC) provide valuable resources for training and evaluating detection models, allowing researchers to compare the performance of different approaches under standardized conditions.

These resources are crucial for driving advancements in the field by providing a common ground for experimentation and progress assessment.

Addressing the challenges posed by AI-generated content requires collaborative efforts across academia, industry, and government organizations. Recognizing the potential for misuse, there is a growing emphasis on proactive measures and the development of advanced detection and prevention methods.

Despite these concerted efforts, the increasing realism and sophistication of AI-generated videos continue to present significant hurdles, highlighting the need for sustained and innovative research in this critical area.

Recommendations

The landscape of AI video detection is rapidly evolving in response to the increasing prevalence and sophistication of AI-generated content, including videos featuring AI avatars. While a variety of tools and platforms are now available, leveraging techniques from machine learning, deep learning, computer vision, and even biometric analysis, the challenge of reliably distinguishing between authentic and synthetic videos remains significant.

For individuals and organizations seeking to identify AI avatar videos, a multi-faceted approach is recommended. Combining the use of automated detection tools with careful manual observation can yield more accurate results.

It is crucial to approach online videos with a critical and skeptical mindset, particularly those that evoke strong emotions or present sensational claims. Close examination of visual details, such as facial features, body language, and environmental consistency, as well as scrutiny of audio quality for synthetic characteristics, should be part of the verification process.

Utilizing reverse image and video search engines can help trace the origin of content and identify potential manipulations. Staying informed about the latest advancements in both AI video generation and detection techniques is also essential.

Users must recognize that current detection tools are not foolproof and can produce errors. When encountering suspicious content, reporting it to the relevant platform or authorities can contribute to broader efforts in combating misinformation.

Looking ahead, advancements in AI video detection are expected to continue as research yields new techniques and refines existing ones. However, the ongoing evolution of AI technology necessitates continuous vigilance and adaptation. The ability to effectively detect AI avatar videos and other forms of synthetic media will remain a critical factor in maintaining trust and integrity in the digital information landscape.