The AI agent market just shifted dramatically, and most businesses are making expensive mistakes by focusing on features instead of ROI. Recent performance testing of three major AI agents (GenSpark, Perplexity Labs, and Manus) reveals critical insights that directly impact your operational costs and deployment timeline.

The Real Cost of AI Agent Performance Differences

Speed matters more than most business leaders realize. When GenSpark completes a landing page in minutes while Manus takes 15 minutes for the same task, you’re not just talking about convenience. If the GenSpark run takes about 3 minutes, that is a fivefold speed gap (400% faster), and it compounds across every project.

Consider the math: If your team runs 20 AI agent tasks daily, switching from a slow agent to a fast one saves 3-4 hours of waiting time. That’s roughly half a workday returned to productive activities. For a team billing $150 per hour, that’s $450 to $600 daily in recovered productivity.
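The arithmetic can be checked with a short sketch. The per-task saving of 9 to 12 minutes is an assumption, back-derived by spreading the 3-4 hour figure across 20 tasks; it is not a measured value, and the function name is purely illustrative.

```python
def daily_recovered_value(tasks_per_day, minutes_saved_per_task, hourly_rate):
    """Return (hours_saved, dollars_recovered) for one day of agent tasks."""
    hours_saved = tasks_per_day * minutes_saved_per_task / 60
    return hours_saved, hours_saved * hourly_rate


# Assumed range: 9-12 minutes saved per task (illustrative, not measured).
for minutes in (9, 12):
    hours, dollars = daily_recovered_value(20, minutes, 150)
    print(f"{minutes} min/task saved -> {hours:.1f} h/day, ${dollars:.0f}/day")
```

At $150 per hour, 3 hours of recovered time is worth $450 and 4 hours is worth $600, so $600 is the top of the range. Swap in your own task counts and billing rate before drawing conclusions.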

The testing data shows GenSpark consistently delivered deployment-ready outputs while competitors required additional iterations. This completion rate difference creates hidden costs most businesses overlook during vendor selection.

Deployment Readiness Creates Hidden Budget Impact

Many AI agents create impressive demos that fail real-world deployment standards. The comparison testing revealed stark differences in output quality that directly affect implementation budgets.

GenSpark produced landing pages with working buttons, proper responsive design, and accurate content extraction. Perplexity Labs generated visually appealing pages with broken call-to-action buttons and unreadable text sections. Manus created decent designs but used placeholder images instead of relevant visuals.

These quality gaps force additional development cycles. A broken CTA button means hiring developers to fix code. Poor responsive design requires design iteration. Each fix burns budget and delays launch dates.

Smart businesses calculate total cost of ownership, including post-generation fixes. A tool that costs more upfront but delivers deployment-ready outputs often provides better ROI than cheaper alternatives requiring extensive refinement.
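A rough way to compare total cost of ownership is to add the expected cost of post-generation fixes to the subscription price. The sketch below uses two hypothetical tools with made-up prices and fix rates; none of the numbers come from the testing, and the field names are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AgentCost:
    name: str
    subscription_per_month: float  # upfront tool price
    outputs_per_month: int         # pages, reports, etc. generated
    fix_rate: float                # share of outputs needing post-generation fixes
    fix_hours: float               # developer hours per fix
    dev_hourly_rate: float         # cost of the developer doing the fix

    def monthly_tco(self) -> float:
        """Subscription plus the expected cost of fixing flawed outputs."""
        fixes = self.outputs_per_month * self.fix_rate
        return self.subscription_per_month + fixes * self.fix_hours * self.dev_hourly_rate


# Hypothetical figures only: a pricier tool with few fixes vs a cheaper one with many.
pricey = AgentCost("Tool A", 200.0, 40, 0.10, 2.0, 150.0)
cheap = AgentCost("Tool B", 50.0, 40, 0.50, 2.0, 150.0)

for tool in (pricey, cheap):
    print(f"{tool.name}: ${tool.monthly_tco():,.0f} per month")
```

Under these assumed inputs the cheaper subscription ends up costing several times more once fixes are counted, which is the pattern the paragraph above describes. The result depends entirely on the fix rate you measure for your own workloads.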

Speed vs Quality Matrix for Business Applications

Different business scenarios demand different performance priorities. The testing results create a clear decision framework for matching tools to use cases.

For rapid prototyping and client presentations, speed takes priority. GenSpark’s ability to create professional-looking landing pages in minutes makes it ideal for agencies pitching concepts or startups testing market response. The output quality exceeds presentation thresholds while maintaining development velocity.

For analytical dashboards and data visualization, quality and interactivity matter more than speed. Perplexity Labs excelled at creating interactive reports with clickable elements and visual analytics. The extra generation time pays off when presenting complex data to stakeholders or clients.

For comprehensive research projects requiring detailed documentation, Manus provides thorough analysis despite slower processing. The PDF reports include extensive research and professional formatting suitable for formal business presentations.
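The three scenarios above can be written down as a simple lookup that a team could adapt into its own guidelines. This is a minimal sketch: the enum and function names are hypothetical, and the mapping only restates the recommendations in this section rather than adding new findings.

```python
from enum import Enum


class UseCase(Enum):
    RAPID_PROTOTYPE = "rapid prototyping and client presentations"
    ANALYTICS_DASHBOARD = "analytical dashboards and data visualization"
    DEEP_RESEARCH = "comprehensive research documentation"


# (suggested agent, priority) per use case, taken from the scenarios above.
RECOMMENDED = {
    UseCase.RAPID_PROTOTYPE: ("GenSpark", "speed"),
    UseCase.ANALYTICS_DASHBOARD: ("Perplexity Labs", "quality and interactivity"),
    UseCase.DEEP_RESEARCH: ("Manus", "thoroughness"),
}


def recommend(use_case: UseCase) -> str:
    """Return a one-line recommendation for the given use case."""
    agent, priority = RECOMMENDED[use_case]
    return f"{use_case.value}: prioritize {priority}, start with {agent}"


for case in UseCase:
    print(recommend(case))
```

Keeping the mapping in one place makes it easy to update as the tools change, which matters given how quickly their relative standing has shifted.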

Resource Allocation Strategy Based on Performance Data

The performance differences suggest specific resource allocation strategies for different business types.

Small businesses and startups should prioritize speed and deployment readiness. Limited budgets and tight timelines make GenSpark’s rapid output generation valuable. The ability to create functional landing pages or basic applications quickly supports lean development approaches.

Enterprise teams handling complex analytics should invest in Perplexity Labs for data-heavy projects. The interactive dashboard capabilities and research depth justify longer processing times when dealing with high-stakes business intelligence or client reporting.

Marketing agencies managing multiple client projects benefit from maintaining access to multiple tools. GenSpark handles rapid campaign asset creation, while Perplexity Labs manages analytical reports and research briefs.

Integration Planning for Multi-Agent Workflows

The performance data suggests that combining multiple AI agents produces better workflows than relying on a single tool.

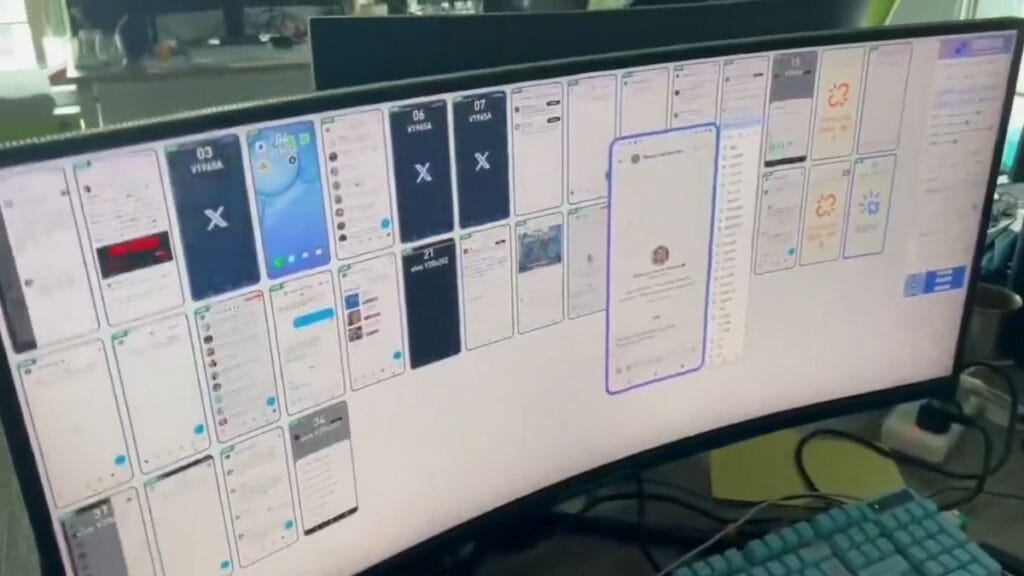

Forward-thinking teams are developing hybrid approaches. GenSpark handles initial rapid prototyping and client-facing assets. Perplexity Labs manages data analysis and interactive reporting. Manus provides detailed research documentation for complex projects.

This multi-agent strategy requires workflow planning and team training. Staff need clear guidelines for tool selection based on project requirements, deadlines, and quality standards.

Budget allocation should reflect usage patterns. High-volume rapid prototyping justifies investment in GenSpark. Regular analytical reporting supports a Perplexity Labs subscription. Occasional deep research projects make Manus cost-effective on a per-project basis.

Market Position Assessment and Competitive Implications

The rapid advancement in AI agent capabilities creates competitive pressure across industries. Businesses using outdated tools or manual processes face a growing disadvantage against competitors leveraging high-performance AI agents.

The testing revealed how quickly market positions shift. Manus dominated months ago but now trails in speed and output quality. Perplexity Labs emerged rapidly with competitive capabilities. GenSpark established leadership in deployment-ready output generation.

This volatility demands flexible vendor strategies. Long-term exclusive contracts with single AI agent providers create risk exposure. Portfolio approaches with multiple tools provide better adaptation to market changes.

Implementation Timeline and Change Management

Rolling out AI agents requires careful change management planning. The performance differences affect training requirements and adoption timelines.

GenSpark’s intuitive interface and rapid output generation support faster team adoption. New users see immediate results, building confidence and usage momentum. The learning curve remains manageable for non-technical staff.

Perplexity Labs requires more training for effective utilization. The dashboard creation and analytical features need explanation and practice. Teams should plan extended onboarding periods and provide comprehensive training resources.

Manus demands patience during adoption. The slower processing speed and research-heavy output require workflow adjustments. Teams accustomed to rapid iteration may find the pace frustrating initially.

Strategic Planning for AI Agent Evolution

The rapid pace of AI agent development requires strategic planning for continuous technology adoption. Today’s performance leaders may face challenges from tomorrow’s emerging tools.

Businesses should establish evaluation frameworks for assessing new AI agents as they emerge. Regular testing cycles help identify performance improvements and new capabilities worth adopting.

Budget planning should include technology refresh cycles. AI agent capabilities advance quickly enough to justify annual or twice-yearly tool evaluation and potential switching.

Taking Action on Performance Insights

Start by auditing your current AI agent usage patterns. Identify tasks taking excessive time or requiring extensive post-generation fixes. Calculate the actual cost of these inefficiencies across your team’s weekly workflow.

Test the agents discussed here with your specific use cases. The performance differences vary significantly based on task type and quality requirements. What works for landing page creation may not work for data analysis projects.

Develop clear selection criteria for different project types. Create internal guidelines helping team members choose appropriate tools based on deadlines, quality standards, and output requirements. This framework prevents costly tool mismatches and improves overall productivity.