When building AI agents that need web data, most developers hit the same wall: rate limits, CAPTCHAs, JavaScript blocks, and outright bans. These roadblocks stop agents from getting the data they need, making real-time web access nearly impossible.

But what if your AI agents could browse the web like humans, getting past these blocks while handling complex tasks? This is where Model Context Protocol (MCP) servers come in, changing how AI agents interact with the web.

What Are MCP Servers?

MCP (Model Context Protocol) is an open protocol, introduced by Anthropic in late 2024, that gives AI agents a standard way to talk to external tools and data sources. In 2025, many companies build MCP servers that both developers and AI users can plug into.

Bright Data’s MCP server stands out in this field. It is more than a basic proxy or scraper: it gives AI agents full web access, with tools that can control a web browser and perform complex tasks most scrapers can’t handle.

How MCP Servers Work With AI

When paired with a client like Claude Desktop or a custom AI agent, MCP servers unlock true web browsing skills. Your agent can:

- Get past rate limits and CAPTCHAs

- Handle JavaScript on complex sites

- Deal with pop-ups and blocks in real time

- Click buttons and fill forms

- Browse sites that block most bots (like Reddit)

- Scale to hundreds of browser tasks at once

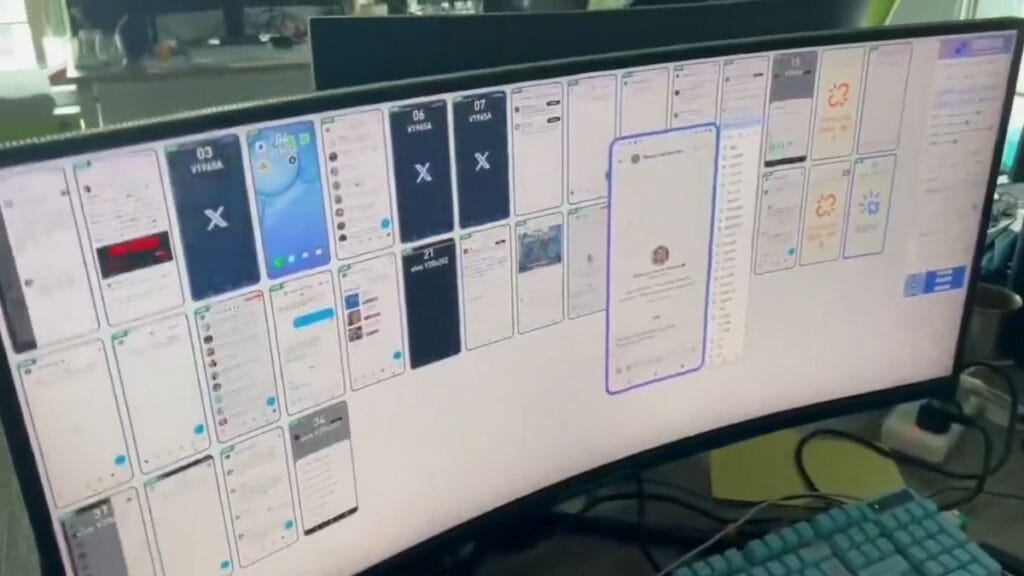

The big step forward is that these tools can create a remote browser that works like a human user. Your AI agent can see what’s on the page, click buttons, fill forms, and react to what happens – just like you would.
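To make the "hundreds of browser tasks at once" point concrete, here is a minimal sketch of how an agent might fan out many tasks while capping how many run at the same time. The `run_browser_task` coroutine is a hypothetical stand-in for a real remote-browser call, and the concurrency limit of 50 is an arbitrary assumption, not a Bright Data setting.

```python
import asyncio


async def run_browser_task(url: str) -> str:
    """Hypothetical stand-in for one remote-browser task (e.g. an MCP tool call)."""
    await asyncio.sleep(0.01)  # simulate network and browser latency
    return f"done: {url}"


async def run_many(urls: list[str], max_concurrent: int = 50) -> list[str]:
    """Run every task concurrently, but never more than max_concurrent at once."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def bounded(url: str) -> str:
        # The semaphore blocks here once max_concurrent tasks are in flight.
        async with semaphore:
            return await run_browser_task(url)

    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(u) for u in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(200)]
    results = asyncio.run(run_many(urls))
    print(len(results), "tasks finished")
```

Capping concurrency with a semaphore keeps the agent from opening every session at once, which matters when each task holds a real browser on the other end.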

MCP Tools in Action

The best way to see what MCP tools can do is through an example. Asked to find noise-canceling headphones on Amazon, an AI agent using MCP tools can:

- Search Amazon for products

- Find and click on top listings

- Extract key details from each product page

- Read and analyze customer reviews

- Make sense of pricing data

- Return a ranked list based on all this info
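The final ranking step in the list above could look something like the sketch below. The `Product` fields, the scoring formula, and its weights are all illustrative assumptions; in practice the agent (or your own code) decides how to weigh ratings, review volume, and price.

```python
import math
from dataclasses import dataclass


@dataclass
class Product:
    title: str
    price: float        # in USD
    rating: float       # average stars, 0 to 5
    review_count: int


def score(product: Product, budget: float) -> float:
    """Hypothetical score: rating weighted by review volume, penalized when over budget."""
    # More reviews mean more trust in the rating, with diminishing returns.
    confidence = math.log10(product.review_count + 1)
    # Fraction by which the price exceeds the budget (0 when within budget).
    over_budget = max(0.0, product.price - budget) / budget
    return product.rating * confidence - 2.0 * over_budget


def rank(products: list[Product], budget: float) -> list[Product]:
    """Return products sorted from best to worst score."""
    return sorted(products, key=lambda p: score(p, budget), reverse=True)


if __name__ == "__main__":
    # Made-up data standing in for details extracted from product pages.
    extracted = [
        Product("Headphones A", 100.0, 4.5, 1000),
        Product("Headphones B", 100.0, 4.5, 10),
        Product("Headphones C", 400.0, 4.5, 1000),
    ]
    for product in rank(extracted, budget=200.0):
        print(product.title)
```

The log-scaled review count is one way to stop a five-star product with a handful of reviews from outranking a well-reviewed one.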

For sites that block most bots, like Reddit, the MCP server shines even more. An AI agent can search Reddit posts, extract comments, analyze sentiment, and pull useful data that would be nearly impossible to get otherwise.

Setting Up MCP With Claude Desktop

To use MCP servers with Claude Desktop, you need a few things:

- Claude Desktop installed on your computer

- Node.js installed

- A Bright Data account with API access

- Two Bright Data tools: Web Unlocker API and Browser API

Once you have these ready, you add the MCP server configuration to Claude Desktop by editing its config file. This connects Claude to the MCP server and gives it access to all the web tools.
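As a rough sketch of what that config change involves: Claude Desktop reads MCP servers from an `mcpServers` object in its `claude_desktop_config.json` file, where each entry names a command to launch. The helper below merges one entry into an existing config. The package name `@brightdata/mcp` and the `API_TOKEN` variable are assumptions here, so check Bright Data's own setup instructions for the exact values, including any extra variables for the Web Unlocker and Browser API.

```python
import json


def add_mcp_server(config: dict, name: str, command: str,
                   args: list[str], env: dict[str, str]) -> dict:
    """Return a copy of a Claude Desktop config with one MCP server entry added."""
    updated = json.loads(json.dumps(config))  # deep copy of JSON-compatible data
    updated.setdefault("mcpServers", {})[name] = {
        "command": command,
        "args": args,
        "env": env,
    }
    return updated


if __name__ == "__main__":
    # In practice, load your existing claude_desktop_config.json instead of {}.
    existing_config: dict = {}
    new_config = add_mcp_server(
        existing_config,
        name="Bright Data",
        command="npx",                       # Node.js package runner
        args=["@brightdata/mcp"],            # assumed package name
        env={"API_TOKEN": "<your-api-token>"},  # assumed variable name
    )
    print(json.dumps(new_config, indent=2))
```

The printed JSON is what would go into the config file. The helper returns a copy rather than editing in place, so any servers already configured are left untouched.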

After restarting Claude Desktop, you’ll see new tools appear that let you scrape websites, control browsers, click elements, and more – all from within your normal Claude conversations.

Building Custom AI Agents With MCP

Developers who want more control can build custom AI agents in Python that connect to MCP servers. This works with libraries like LangGraph and the LangChain MCP adapters.

The basic steps are:

- Set up your Python project with the needed packages

- Create environment variables for your API keys

- Set up a connection to the MCP server

- Build an AI agent that can use these tools

This setup lets you create agents that can access the web with the same tools Claude Desktop uses, but with custom logic and task handling.
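The environment-variable and connection steps above can be sketched with the standard library alone. The variable name `BRIGHT_DATA_API_TOKEN`, the package name `@brightdata/mcp`, and the `API_TOKEN` key are assumptions for illustration. The resulting dictionary describes how to launch the server over stdio, and you would hand it to whichever MCP client library you use (its exact expected shape depends on that library).

```python
import os
from collections.abc import Mapping


def build_server_connection(environ: Mapping[str, str] = os.environ) -> dict:
    """Build stdio launch parameters for an MCP server from environment variables."""
    token = environ.get("BRIGHT_DATA_API_TOKEN")  # hypothetical variable name
    if not token:
        raise RuntimeError("Set BRIGHT_DATA_API_TOKEN before starting the agent")
    return {
        "command": "npx",                # launches the server through Node.js
        "args": ["@brightdata/mcp"],     # assumed package name
        "transport": "stdio",            # client and server talk over stdin/stdout
        "env": {"API_TOKEN": token},     # assumed variable name the server reads
    }


if __name__ == "__main__":
    try:
        connection = build_server_connection()
        print("Would launch:", connection["command"], *connection["args"])
    except RuntimeError as err:
        print(f"Configuration error: {err}")
```

Failing fast on a missing key keeps the error close to its cause, instead of surfacing later as an opaque authentication failure inside a tool call.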

When To Use MCP-Powered Web Scraping

MCP servers work best for tasks that need:

- Access to sites that block most scrapers

- User-like web browsing with clicks and form fills

- Handling of complex sites with JavaScript

- Scale across many sites at once

- Real-time data from the web

Some great uses include price tracking, market research, social media analysis, job hunting, and news gathering.

The Limits Of Traditional Web Scraping

Traditional scrapers fall short when sites use:

- Advanced bot detection

- Required JavaScript

- Complex user flows

- CAPTCHAs

- IP-based blocks

MCP servers like Bright Data’s get around these limits by driving real browsers with human-like behavior, rotating IPs, and solving CAPTCHAs automatically.

Looking Forward

As AI agents become more common, access to web data will only grow more important. MCP servers bridge the gap between AI agents and the web, giving them the ability to browse like humans.

For developers building AI systems that need real-world data, MCP servers offer a way past the roadblocks that have limited AI web access until now.

The rise of MCP servers points to a future where AI agents can freely access, process, and act on web data – making them much more useful for real-world tasks that need current information.

Try adding MCP tools to your AI agents and watch as they start to browse the web with ease, bringing back data that was out of reach before.