When OpenAI released their new o3 and o4 Mini models, the tech world erupted with a bold claim: AGI might finally be here. Sam Altman shared tweets suggesting “genius level” capabilities, an OpenAI model trainer admitted being “tempted to call this model AGI,” and economist Tyler Cowen stated bluntly, “I think it is AGI, seriously.” These claims deserve a closer look. What exactly do these models do, and do they really represent the arrival of Artificial General Intelligence?

The AGI Claims

The release of o3 and o4 Mini sparked immediate reactions from AI researchers and industry observers. John Hullman, a model trainer at OpenAI, wrote: “When o3 finished training and we got to try it out, I felt for the first time that I was tempted to call a model AGI. Still not perfect, but this model will beat me, you, and 99% of humans on 99% of intelligence assessments.”

Tyler Cowen went even further, tweeting: “I think it is AGI, seriously. Try asking it lots of questions, and then ask yourself: just how much smarter was I expecting AGI to be?” He added that AGI is “not much of a social event per se” and that “it still will take us a long time to use it properly.”

These statements from credible sources set off a wave of discussions about whether we’ve crossed an important threshold in AI development.

What Makes These Models Different?

To understand why these claims emerged, we need to look at what sets o3 and o4 Mini apart from previous AI systems:

Advanced Reasoning Capabilities

OpenAI describes o3 as its “most powerful reasoning model,” one that pushes the frontier in coding, math, science, and visual perception. It set new state-of-the-art results on coding benchmarks that simulate real-world programming work, such as SWE-bench, and achieved nearly perfect scores on competition math tests.

o4 Mini, though smaller and optimized for cost efficiency, still performs remarkably well across multiple domains, especially in math: OpenAI reports it as the best-performing model it benchmarked on the AIME 2024 and 2025 competition problems, with a 99.5% score on AIME 2025 when given access to a Python interpreter.

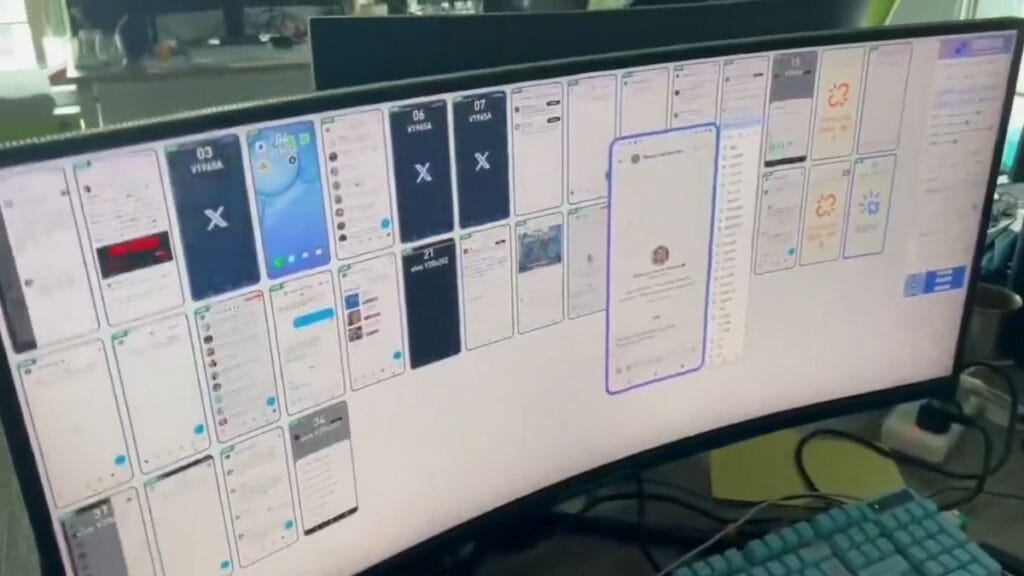

Thinking With Images

Perhaps the most striking feature is what OpenAI calls “thinking with images.” Unlike previous models that simply processed images as inputs, these new models can:

- Integrate images directly into their chain of thought

- Zoom in on specific areas of an image

- Manipulate images (cropping, rotating, and otherwise transforming them) as part of their reasoning

- Process blurry or low-quality images effectively

- Combine visual information with knowledge from across the internet

This way of reasoning with visual information resembles how humans handle what they see: combining it with what they already know to draw conclusions.

Multi-Domain Excellence

A commonly cited requirement for AGI is the ability to perform well across multiple domains without specialized training for each task. Both o3 and o4 Mini show remarkable performance across various benchmarks:

- Near-perfect scores on mathematical benchmarks

- Top performance on coding tasks that simulate real-world programming needs

- Strong results on visual reasoning tests

- Superior performance on general knowledge benchmarks

This broad capability across domains is precisely what many definitions of AGI require.

Is This Really AGI?

The question of whether these models constitute AGI depends largely on how we define the term. If AGI means “a system that can beat the average person on a range of different intelligence tests,” as some suggest, then there’s a strong case that o3 approaches this definition.

However, several important limitations persist:

Hallucinations and Reliability

One critical limitation is what researchers call the “hallucination problem.” According to OpenAI’s system card, o3 hallucinates (makes confident but false assertions) about twice as often as the earlier o1 model on OpenAI’s PersonQA evaluation.

This creates what we might call a “hallucination paradox”: as models become better at reasoning, they may also become more confidently wrong in certain scenarios. One suggested explanation is that “outcome-based optimization incentivizes confident guessing.”

This unreliability means that while these models can theoretically solve many problems, users can’t fully trust them for critical tasks without verification.

Mathematical Limitations

While the 99.5% score on math benchmarks led some to claim that “AI has solved math,” OpenAI researcher Noam Brown offered an important clarification: “We did not solve math. For example, our model is still not great at writing mathematical proofs. o3 and o4 Mini are nowhere near close to getting International Mathematical Olympiad gold medals.”

This distinction matters because true mathematical intelligence includes the ability to create novel proofs and solve previously unsolved problems—capabilities these models haven’t demonstrated.

Self-Direction and Agency

True AGI would likely possess a level of self-direction and agency that current models don’t have. While o3 and o4 Mini can solve problems when prompted, they lack the ability to set their own goals, recognize when they need more information, or operate autonomously in the world.

The “Light at the End of the Tunnel”

John Hullman’s full quote about o3 included an interesting phrase: “one can start to see the light at the end of the tunnel.” This suggests that while o3 might not be complete AGI, it represents a significant milestone on the path to developing such systems.

The capabilities demonstrated by these models—particularly their ability to reason across multiple domains and integrate visual information into their thinking—represent substantial progress toward systems that can match or exceed human performance on a wide range of intellectual tasks.

The Location Detection Phenomenon

An unexpected capability that users discovered soon after release provides another data point in the AGI debate. When shown photos with few obvious visual clues, the models could often identify the geographic location accurately. Combining visual processing with reasoning and background knowledge to reach such surprisingly accurate conclusions resembles human intelligence in meaningful ways.

What’s Holding These Models Back from Being True AGI?

If we accept that o3 and o4 Mini don’t quite reach the threshold of AGI, what’s missing?

Reliability and Trust

The hallucination issue means these systems can’t be fully trusted for many tasks. As one observer noted, “most people right now trust AI to write an email or conduct a decent report, but you’re not going to really trust an AI to book your doctor’s appointment or to use your credit card because on the 1% or 3% chance it messes up, those consequences are just too severe.”

Creative Problem-Solving

While these models excel at tasks they’ve been trained for, they don’t demonstrate the kind of novel, creative problem-solving that characterizes human intelligence. True AGI would likely be able to approach entirely new problems with the kind of flexibility and creativity humans possess.

Physical World Interaction

AGI definitions often include the ability to perceive and interact with the physical world. Current models remain fundamentally software systems without direct perception or manipulation capabilities, though they can reason about physical concepts when provided with information.

The Significance of the AGI Debate

Why does it matter whether we call these models AGI? The classification has implications for how we think about AI development, regulation, and future prospects:

Development Trajectory

If o3 represents early AGI, it suggests we’re further along the development curve than many expected. This could accelerate investment and research in the field and shift focus toward making these systems more reliable rather than more capable.

Regulatory Considerations

The AGI classification could influence regulatory approaches. Systems classified as AGI might face different regulatory frameworks than narrower AI systems.

Economic and Social Impact

If we’re entering an era where AI systems can perform at or above human level across many intellectual domains, the economic and social implications could be profound, affecting everything from knowledge work to education systems.

The Bottom Line

Whether or not o3 and o4 Mini constitute “AGI” might be less important than recognizing that they represent a significant advancement in AI capabilities. As Tyler Cowen noted, “AGI is not much of a social event per se” – meaning the transition to AGI might be gradual rather than sudden.

What’s clear is that these models demonstrate capabilities that push the boundaries of what AI systems can do. They combine reasoning, visual understanding, and knowledge in ways that previous models couldn’t. They can solve problems across multiple domains with impressive accuracy. And they make us question where exactly the line between narrow AI and AGI lies.

The debate about whether OpenAI “basically dropped AGI” will likely continue. But regardless of terminology, these models represent an important milestone in AI development—one that brings us closer to systems that can reason across domains in ways that meaningfully resemble human intelligence.