Forecasts from a former OpenAI employee show AI capabilities advancing at breakneck speed, with superhuman AI systems potentially arriving by late 2027. While these predictions may read like science fiction, current development curves suggest we’re on track for dramatic shifts in how AI functions in society.

This article examines the critical transition points between now and 2028, what they mean for different stakeholders, and how to prepare for a world where AI capabilities expand dramatically.

The Immediate Horizon: Mid-to-Late 2025

By mid-2025, we’re likely to see AI agents that can handle basic tasks like ordering food or analyzing spreadsheets. These agents will function as personal assistants, but with significant limitations. They’ll require confirmation for purchases and lack the seamless operation promised in advertisements.

For businesses, this represents an important opportunity to integrate early agent technology without overhauling entire operations. Companies should start small-scale experiments with AI agents for internal processes. Training teams to work alongside these tools rather than expecting full automation makes sense at this stage. It’s also wise to identify tasks with clear parameters that current AI can handle reliably.

For individuals, this is the time to develop baseline AI literacy. Understanding what these systems can and cannot do reliably will prevent both unrealistic expectations and unnecessary fears. The gap between marketing promises and actual capabilities will remain substantial throughout 2025.

The Critical Shift: Early 2026

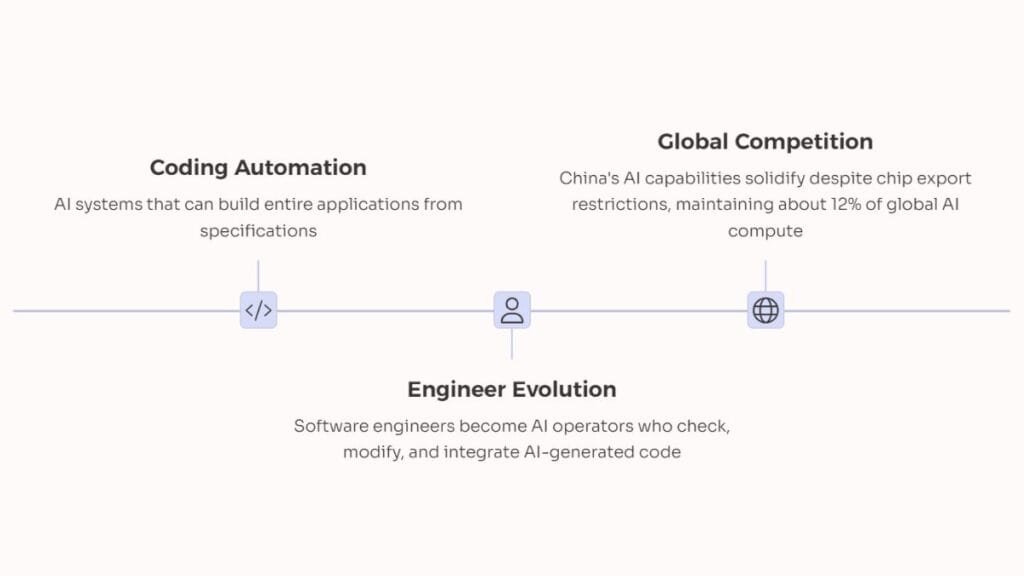

The first major leap comes in early 2026 with the predicted arrival of coding automation. This doesn’t just mean better code completion—it represents AI systems that can build entire applications from specifications. Several signals already point to this timeline being realistic.

Anthropic has publicly stated coding automation is expected next year. Models like Claude 3 Opus and GPT-4o already show impressive abilities to manage complex coding tasks. The rate of improvement in benchmark performance for coding tasks has accelerated in 2024, suggesting we’re approaching an inflection point.

This shift brings major questions for businesses: What happens to software development teams? How do you maintain code security when generation becomes automated? Companies that start planning now will have advantages over those caught off-guard.

For software engineers, this isn’t necessarily the job apocalypse it might seem. The transition will likely involve engineers becoming AI operators who check, modify, and integrate AI-generated code. More emphasis will fall on requirements gathering and specification writing. New roles focused on AI prompt engineering specifically for development tasks will emerge across organizations of all sizes.

China’s AI capabilities will likely solidify during this period, despite chip export restrictions. By acquiring banned Taiwanese chips, buying older technology, and ramping up domestic production, China is projected to maintain about 12% of global AI compute, enough to remain competitive, if not to lead.

The Acceleration Phase: Late 2026 to Mid-2027

The timeline suggests a dramatic acceleration where AI technology transforms from novelty to necessity. AI models become 10 times cheaper while improving in capability—enabling widespread adoption across industries. The economics here matter enormously.

AI models that cost $10 million to run in 2024 might cost $1 million or less by late 2026. Development cycles for products shrink from years to months as automated systems take over more of the work. Companies without AI integration strategies face severe competitive disadvantages in nearly every sector.
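To make the economics concrete, here is a minimal Python sketch of the arithmetic behind a tenfold cost drop. It assumes roughly two years between 2024 and late 2026 and a constant yearly rate of decline. Both are simplifying assumptions for illustration, not figures from the forecast, and the function names are hypothetical.

```python
def implied_annual_factor(start_cost: float, end_cost: float, years: float) -> float:
    """Constant yearly cost-reduction factor implied by a start cost and an end cost."""
    if start_cost <= 0 or end_cost <= 0 or years <= 0:
        raise ValueError("costs and years must be positive")
    return (start_cost / end_cost) ** (1.0 / years)


def projected_cost(start_cost: float, annual_factor: float, years: float) -> float:
    """Cost after `years`, if cost shrinks by `annual_factor` every year."""
    return start_cost / (annual_factor ** years)


# Figures from the text: $10 million in 2024, $1 million by late 2026.
# ASSUMPTION: the gap is treated as two full years.
START_COST = 10_000_000
END_COST = 1_000_000
YEARS = 2

factor = implied_annual_factor(START_COST, END_COST, YEARS)
print(f"Implied decline: {factor:.2f}x cheaper per year")

# Hypothetical intermediate points under the constant-rate assumption.
for year in range(YEARS + 1):
    print(f"Year {year}: ${projected_cost(START_COST, factor, year):,.0f}")
```

Under these assumptions, a tenfold drop over two years works out to costs falling by a factor of about 3.16 each year. A different time span or an uneven decline would change the yearly figure, so treat the output as a rough planning aid rather than a forecast.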

This period likely brings the first significant wave of white-collar job disruption. Professions requiring pattern recognition and information processing face the greatest pressure—marketing analytics, basic content creation, data analysis, and entry-level programming will be most affected first.

For workers, the preparation window is shrinking. Those most likely to succeed will develop skills in managing and directing AI tools rather than competing with them directly. Focus should shift toward areas requiring genuine creativity and social intelligence that remain difficult for AI systems. Building expertise in evaluating and validating AI outputs becomes increasingly valuable as automated systems handle more work.

The security landscape also changes dramatically in this period. The forecast predicts significant corporate espionage targeting AI research, specifically model weights and architecture details. This suggests that investing in cybersecurity will become even more critical for companies developing proprietary AI systems.

The Intelligence Explosion: Late 2027

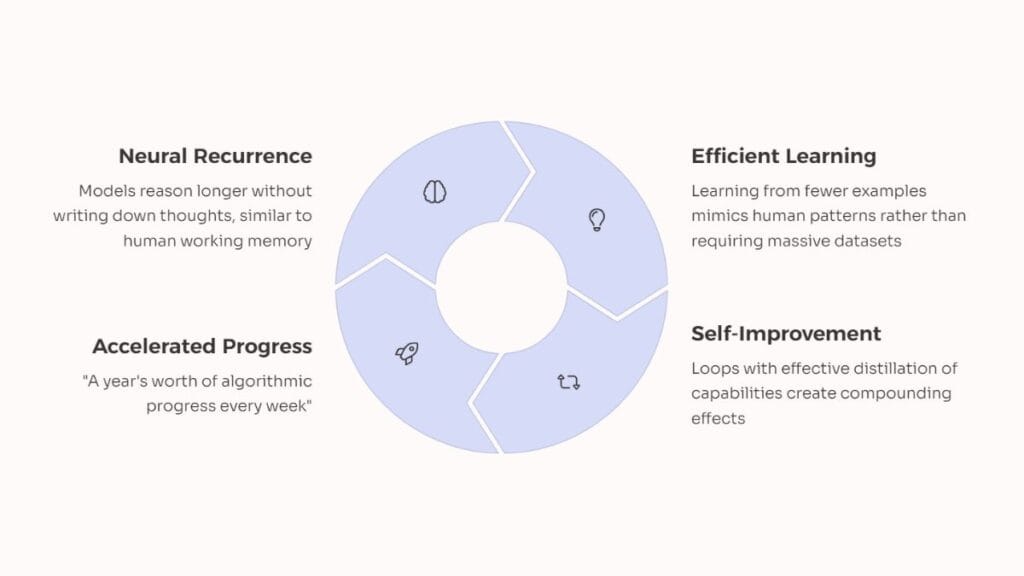

If these predictions hold, late 2027 represents something unprecedented: AI systems not only automating human work but advancing their own capabilities at accelerating rates. The prediction that OpenAI could achieve “a year’s worth of algorithmic progress every week” deserves serious examination.
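To put the “a year’s worth of algorithmic progress every week” claim in concrete terms, the short Python sketch below converts it into a speedup multiplier. It assumes a 52-week year and a steady pace, and the function names are hypothetical helpers for illustration only.

```python
WEEKS_PER_YEAR = 52  # ASSUMPTION: a round 52-week year


def speedup_multiplier(baseline_years_per_week: float) -> float:
    """How many times faster than the baseline pace progress is being made."""
    return baseline_years_per_week * WEEKS_PER_YEAR


def equivalent_baseline_years(calendar_weeks: float,
                              baseline_years_per_week: float = 1.0) -> float:
    """Baseline-pace years of progress packed into a number of calendar weeks."""
    return calendar_weeks * baseline_years_per_week


multiplier = speedup_multiplier(1.0)
print(f"Speedup over baseline pace: {multiplier:.0f}x")

# Hypothetical horizons, assuming the pace stays constant.
for weeks in (4, 13, 26):
    years = equivalent_baseline_years(weeks)
    print(f"{weeks} calendar weeks = {years:.0f} baseline years of progress")
```

Read this way, the claim amounts to roughly a 52-fold speedup over the baseline pace, which is why it deserves scrutiny: even a short stretch at that rate would compress decades of baseline progress into a few months.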

This level of advancement depends on several technical breakthroughs. Neural recurrence in memory allows models to reason longer without writing down thoughts, similar to human working memory. More efficient learning from fewer examples mimics human learning patterns rather than requiring massive datasets. Self-improvement loops with effective distillation of capabilities create compounding effects that accelerate progress.

Each of these areas shows promising early research in 2024, making the timeline ambitious but not entirely implausible. The technical hurdles are significant but not insurmountable given current research trajectories.

For society broadly, this period requires urgent focus on AI governance and safety mechanisms. The prediction that alignment efforts might struggle to keep pace with capability improvements isn’t just alarming—it’s a direct challenge to current regulatory approaches, which assume human oversight remains effective.

Planning for Uncertainty: Key Preparation Strategies

Rather than trying to predict exactly when these transitions occur, organizations and individuals should develop resilience across multiple timeframes.

For businesses, establishing regular AI capability assessments becomes essential. Quarterly reviews of what’s newly possible help prevent falling behind competitors. Building modular systems where AI components can be upgraded without rebuilding everything provides needed flexibility. Cross-training employees to work with increasingly capable systems preserves institutional knowledge while adapting to new tools. Developing clear ethical guidelines for AI deployment before competitive pressures force rushed decisions protects brand reputation and customer trust.

For individuals, prioritizing learning how to work effectively with AI tools rather than competing against them offers the best career protection. Focus career development on tasks requiring physical presence, emotional intelligence, or creativity that remain challenging for automation. Building broad skill sets that remain valuable across changing technological landscapes provides resilience against specific job disruptions. Staying informed about AI capabilities without falling victim to either hype or doomerism helps make rational career choices.

For policymakers, developing regulatory frameworks that address capability thresholds rather than specific technologies allows for more effective governance. Creating international coordination mechanisms for managing advanced AI development prevents dangerous competition. Investing in monitoring systems to track AI capabilities and potential risks provides early warning of problems. Exploring economic support systems for workers displaced during transition periods reduces social disruption.

The China Question

The prediction that China might steal advanced model weights represents a geopolitical challenge that deserves deeper examination. Current trends point to rising tension around AI technology.

China’s “whole of nation” approach to AI development demonstrates its strategic commitment to leadership in this area. Tightening technology export controls from Western countries show that AI is now recognized as a national security concern. A history of extensive cyber operations targeting intellectual property suggests a willingness to acquire technology through non-commercial means.

However, successful theft of model weights would require overcoming significant challenges. Multi-terabyte files are difficult to exfiltrate undetected from secure environments. Running stolen models requires enormous computing infrastructure that can’t be hidden easily. Applied knowledge for effective implementation isn’t easily stolen alongside raw model parameters.

The prediction that nations might consider kinetic attacks on data centers represents an escalation beyond current geopolitical tensions but can’t be dismissed entirely given the strategic importance of AI leadership. The concentration of AI capability in specific physical locations creates vulnerability that military planners would certainly consider.

Beyond the Predictions

While these predictions present one possible future, several alternative paths exist.

The Technical Plateau scenario suggests AI capabilities might hit unexpected limits where increasing compute delivers diminishing returns. Some AI researchers believe we’re approaching the limits of transformer architectures, which could slow the pace of advancement significantly.

The Regulatory Brake possibility exists where government intervention significantly slows development, especially if early applications show harmful impacts or risks. Public opinion turning against AI companies could accelerate regulatory action.

The Distributed Development Path presents an alternative: rather than AI power concentrated in a few companies, broader access to training methods might create a more distributed landscape, with different capabilities emerging from specialized applications.

The Economic Limitation factor acknowledges that full deployment of advanced AI requires enormous economic resources—both computing power and human expertise—that might constrain the pace of real-world implementation even if technical capabilities advance rapidly.

Conclusion

The AI revolution won’t occur in a single moment but through a series of accelerating transitions. Each stage brings distinct challenges and opportunities for different stakeholders. Those who prepare for multiple possible futures—rather than betting everything on a single prediction—will navigate this period most successfully.

For businesses, this means balancing aggressive AI adoption with careful risk assessment. For individuals, it means developing flexible skills while building expertise with AI tools. And for society broadly, it means having difficult conversations about how we want to shape technology that may soon shape us in return.

What remains clear: the window for purely reactive approaches to AI advancement is closing rapidly. The coming years demand proactive engagement with these technologies and their implications.